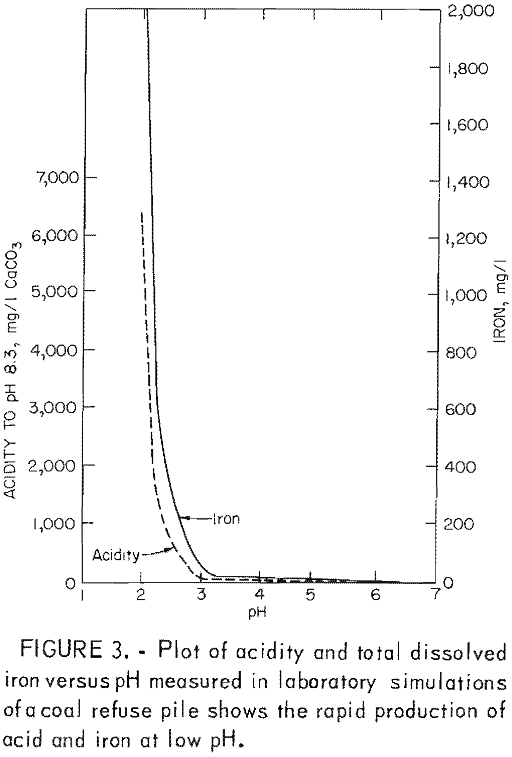


Acid mine drainage from underground coal mines and coal refuse piles is one of the most persistent industrial pollution problems in the United States. Pyrite in the coal and overlying strata, when exposed to air and water, oxidizes, producing ferrous ions and sulfuric acid. The ferrous ions are in turn oxidized, producing a hydrated iron oxide (yellowboy) and more acidity. The acid lowers the pH of the water, making it corrosive and unable to support many forms of aquatic life. The iron oxide forms an unsightly coating on the bottom of streams and further limits the ability of aquatic life to survive in streams affected by acid mine drainage.

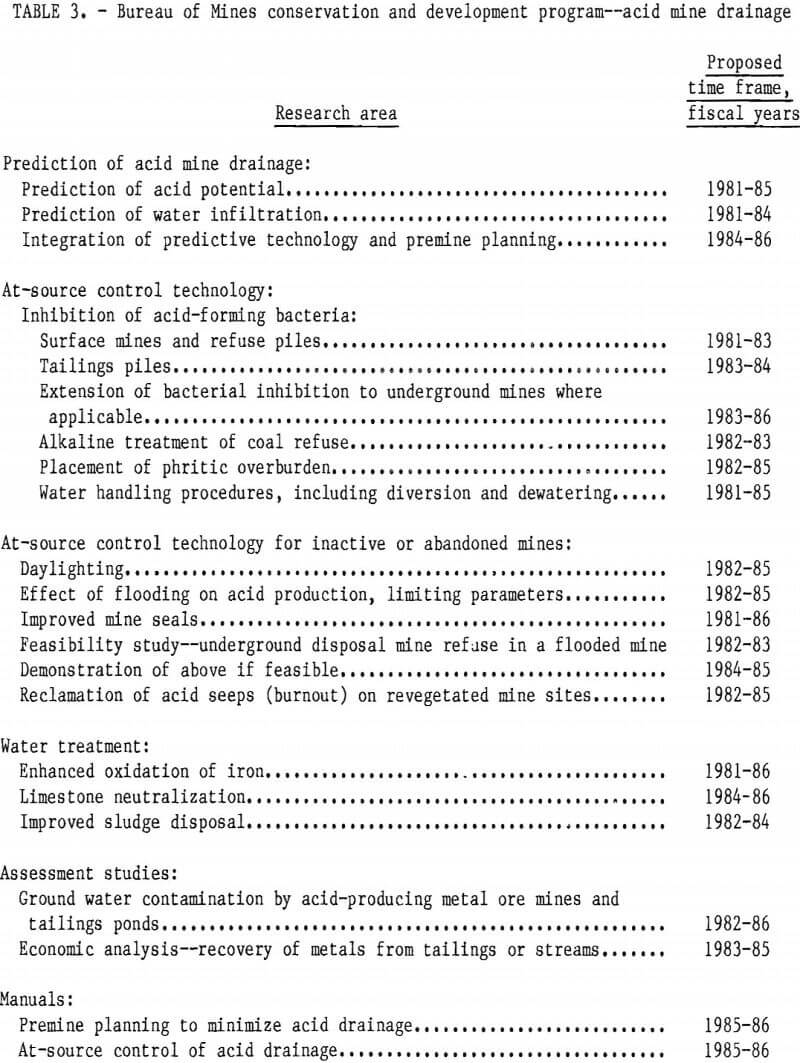

Before coal is mined, very little of the pyrite is exposed to the conditions necessary to produce acid drainage. The mining and coal cleaning process exposes the pyrite to surface or ground waters and allows pyrite oxidation to occur. A ton of coal containing 1 pct pyritic sulfur has the potential of producing 33 pounds of yellowboy and over 60 pounds of sulfuric acid. The rate of acid production varies, but abandoned mines and refuse piles can produce acid drainage for more than 50 years. The drainage, if discharged into surface streams or ponds, constitutes an extensive, expensive, and persistent environmental problem (fig. 1). Federal law now requires that water discharged from active coal mines have a pH between 6 and 9 and places limits on total iron, manganese, and suspended solids. Controlling acid mine drainage from active mines usually requires expensive water treatment and the handling of very large volumes of water. Controlling drainage from abandoned mines is even more difficult because of location, water volume, and the general limitation on public funds available for work in this area. The Bureau of Mines research program in acid mine drainage addresses the problems of both active and abandoned mines.
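The figures of 33 pounds of yellowboy and over 60 pounds of sulfuric acid per ton of coal with 1 pct pyritic sulfur can be reproduced from stoichiometry. The sketch below assumes a 2,000-lb short ton, complete oxidation of all pyritic sulfur, and the overall reaction FeS2 + 15/4 O2 + 7/2 H2O = Fe(OH)3 + 2H2SO4; the function name is hypothetical. Each sulfur atom ends up in one molecule of sulfuric acid, and each pyrite unit (two sulfur atoms) in one Fe(OH)3.

```python
# Stoichiometric acid and yellowboy potential of pyritic sulfur.
# Assumes complete oxidation by the overall reaction
#   FeS2 + 15/4 O2 + 7/2 H2O -> Fe(OH)3 + 2 H2SO4

# Standard atomic weights (g/mol); the same numbers serve as lb per lb-mol.
FE, S, O, H = 55.845, 32.06, 15.999, 1.008

FE_OH3 = FE + 3 * (O + H)      # ferric hydroxide ("yellowboy")
H2SO4 = 2 * H + S + 4 * O      # sulfuric acid


def potential_per_ton(pct_pyritic_sulfur, ton_lb=2000.0):
    """Return (lb yellowboy, lb H2SO4) per ton of coal (hypothetical helper)."""
    sulfur_lb = ton_lb * pct_pyritic_sulfur / 100.0
    sulfur_lbmol = sulfur_lb / S
    pyrite_lbmol = sulfur_lbmol / 2          # two S atoms per FeS2
    yellowboy_lb = pyrite_lbmol * FE_OH3     # one Fe(OH)3 per FeS2
    acid_lb = sulfur_lbmol * H2SO4           # one H2SO4 per S atom
    return yellowboy_lb, acid_lb


yb, acid = potential_per_ton(1.0)
print(f"yellowboy: {yb:.1f} lb, sulfuric acid: {acid:.1f} lb")
```

The result scales linearly with sulfur content, so it is an upper bound on potential; how much is actually released depends on how much of the pyrite is exposed and oxidized.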

In 1936, it was estimated that more than 9 million tons of sulfuric acid were discharged annually from coal mines into the streams of Pennsylvania. Now, although Federal and State regulations specify water quality standards for coal mine discharges, acid mine drainage remains a problem. The most comprehensive survey of the extent of acid mine drainage pollution was conducted by the Federal Water Pollution Control Administration in 1967. Drainage basins throughout the Appalachian Region, in Pennsylvania, West Virginia, Maryland, Ohio, Kentucky, Virginia, Tennessee, Alabama, and Georgia, were sampled for various water quality parameters, including acidity, to determine the extent of water pollution caused by coal mine drainage. At that time, 10,500 miles of streams were significantly degraded by coal mine drainage, and further analysis of the data showed that approximately half, or 5,740 miles, was affected by acid mine drainage.

Since that time no Federal agency has undertaken a survey of this scale; current mine drainage assessments are restricted to statewide estimates. Direct comparisons between current State data and those collected in the 1967 study cannot be made, because of differences in the intensity of sampling and variation in the criteria used to classify a stream as degraded. However, current water quality data, obtained from State environmental agencies of the Appalachian Region, do indicate that there has been little overall improvement in streams affected by acid mine drainage.

In Pennsylvania, which annually produces approximately 82 million tons of coal, there are 2,795 miles of major streams that fail to meet current water quality standards. Drainage from abandoned mines contributes to at least 70 pct of the total. Although projected figures for 1983 indicate a slight decrease in stream miles that will not meet water quality standards, no decrease in the drainage pollution related to mining is expected.

In Ohio, where annual coal production was nearly 42 million tons, a 1979 study conducted by the Ohio Environmental Protection Agency indicates that acid mine drainage affects 1,075 miles of streams. In Maryland, where annual coal production was just over 2½ million tons, the Maryland Bureau of Mines currently estimates that 450 miles of streams are affected by acid mine drainage, much of it related to mining in neighboring States. In Tennessee, where annual coal production is nearly 11½ million tons, acid mine drainage was estimated to affect 994 miles of streams, according to the Division of Water Quality Control of the Tennessee Department of Public Health. In Kentucky, the Department for Natural Resources and Environmental Protection, Division of Abandoned Lands, has compiled data for 1978-80 which show that of a total of more than 2,100 miles of streams within the Eastern Coalfield affected by coal mine drainage, only 77 miles are acid. The low proportion of acid streams in this area of Kentucky is apparently related to the abundance of limestone in the coal-bearing rocks.

Although rigorous comparisons cannot be made, the more recent data indicate that the extent of acid mine drainage pollution is of the same order of magnitude as that measured in the 1967 study. Government regulation has done much to upgrade the quality of discharges from active mining operations, but most acid mine drainage comes from abandoned mines, which are not regulated. In spite of increased efforts to control it, acid mine drainage continues to be a major problem.

Present regulations stipulate that acid drainage from active mines and refuse piles must be chemically treated for as long as it is discharged from the mine or pile. These mandatory standards have generated research needs in the following areas: more efficient treatment methods, disposal of the sludge produced by treatment, engineering methods to reduce acid formation and other at-source methods of control, control of acid formation and erosion from spoil piles, and improved water management techniques. In addition, there is no systematic, cost-effective method of reducing the acid load from mines already abandoned and from mines to be abandoned in the future, although an estimated 80 pct of acid mine water comes from abandoned mines and spoil piles. This area particularly requires a concentrated research effort if there is to be significant improvement in the quality of many streams.

Chemistry of Formation and Control

Prevention and/or control of acid mine water depends on an understanding of the chemical, biological, and geological factors that influence its formation, which is best described as a series of chemical reactions. Acid mine water is produced by the oxidation of the pyrite (FeS2) normally present in coal and the adjacent rock strata. The oxidation of pyrite is usually described by the reaction below in which pyrite, oxygen, and water form sulfuric acid and ferrous sulfate:

$$\mathrm{2FeS_2 + 7O_2 + 2H_2O = 4H^{+} + 2Fe^{2+} + 4SO_4^{2-}}.$$

Oxidation of the ferrous iron produces ferric ions according to the following reaction:

$$\mathrm{2Fe^{2+} + \tfrac{1}{2}O_2 + 2H^{+} = 2Fe^{3+} + H_2O}.$$

When the ferric ion hydrolyzes, it produces an insoluble ferric hydroxide (yellowboy) and more acid:

$$\mathrm{Fe^{3+} + 3H_2O = Fe(OH)_3 + 3H^{+}}.$$
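As a cross-check on the three reactions above, the sketch below tallies atoms and charge on each side and confirms that they balance. It also checks their sum per mole of pyrite (half of the first reaction, half of the second, all of the third), FeS2 + 15/4 O2 + 7/2 H2O = Fe(OH)3 + 4H⁺ + 2SO4²⁻, which shows that each mole of pyrite ultimately yields the equivalent of two moles of sulfuric acid. The species table and helper names are hypothetical illustrations.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical species table: element counts and ionic charge.
SPECIES = {
    "FeS2": ({"Fe": 1, "S": 2}, 0),
    "O2": ({"O": 2}, 0),
    "H2O": ({"H": 2, "O": 1}, 0),
    "H+": ({"H": 1}, 1),
    "Fe2+": ({"Fe": 1}, 2),
    "Fe3+": ({"Fe": 1}, 3),
    "SO4 2-": ({"S": 1, "O": 4}, -2),
    "Fe(OH)3": ({"Fe": 1, "O": 3, "H": 3}, 0),
}


def totals(side):
    """Sum atoms and charge over a list of (coefficient, species) pairs."""
    atoms, charge = Counter(), Fraction(0)
    for coef, name in side:
        elements, z = SPECIES[name]
        for element, n in elements.items():
            atoms[element] += coef * n
        charge += coef * z
    return atoms, charge


def balanced(left, right):
    """True if both atoms and charge match on the two sides."""
    return totals(left) == totals(right)


half = Fraction(1, 2)
REACTIONS = {
    "pyrite oxidation": ([(2, "FeS2"), (7, "O2"), (2, "H2O")],
                         [(4, "H+"), (2, "Fe2+"), (4, "SO4 2-")]),
    "ferrous oxidation": ([(2, "Fe2+"), (half, "O2"), (2, "H+")],
                          [(2, "Fe3+"), (1, "H2O")]),
    "ferric hydrolysis": ([(1, "Fe3+"), (3, "H2O")],
                          [(1, "Fe(OH)3"), (3, "H+")]),
    "overall (per FeS2)": ([(1, "FeS2"), (Fraction(15, 4), "O2"), (Fraction(7, 2), "H2O")],
                           [(1, "Fe(OH)3"), (4, "H+"), (2, "SO4 2-")]),
}

for name, (left, right) in REACTIONS.items():
    print(f"{name}: balanced = {balanced(left, right)}")
```

Exact fractions are used so that the half mole of oxygen in the second reaction does not introduce floating-point error into the comparison.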

Although this summary is correct at pH above about 4.0, it is only one of three different reaction systems, which vary in significance with pH. A bacterium, Thiobacillus ferrooxidans, also plays a significant role in the oxidation of pyrite.

At near-neutral pH (stage 1), the rates of oxidation by air and by T. ferrooxidans are comparable. This stage is typical of freshly exposed coal or refuse. Despite the high concentration of pyrite, the rate of oxidation either by oxygen or by T. ferrooxidans is relatively low, and the natural alkalinity of ground water may effectively neutralize the acid formed at this stage.

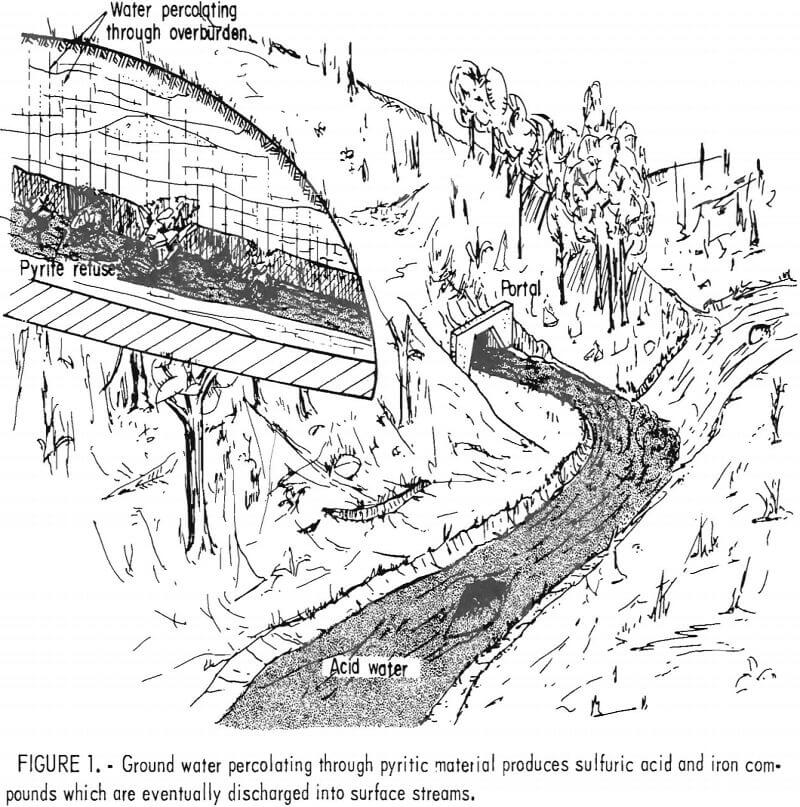

When the neutralizing capacity of the environment is exceeded, acid begins to accumulate and the pH decreases (stage 2). As the pH decreases, the rate of iron oxidation by oxygen also decreases. But at the lower pH of stage 2, the rate of iron oxidation by T. ferrooxidans increases. The action of the bacteria causes increased acid production, which serves to further lower pH (fig. 2).

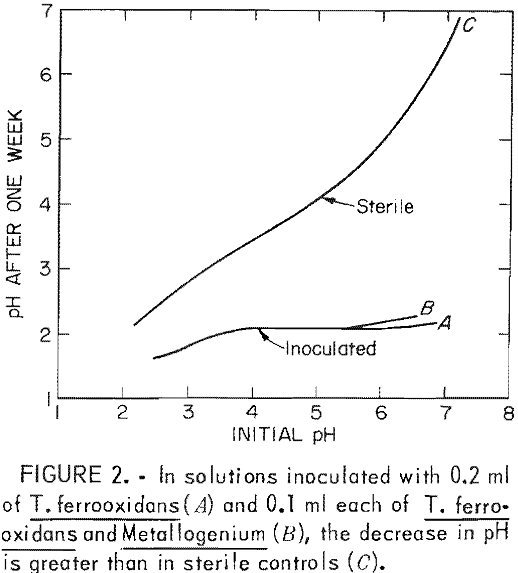

As the pH in the immediate vicinity of the pyrite falls to less than 3, the increased solubility of iron and the decreased rate of Fe(OH)3 precipitation affect the overall rate of acid production (stage 3). At this point, ferrous iron is oxidized by T. ferrooxidans and the ferric ion in turn oxidizes the pyrite:

$$\mathrm{FeS_2 + 14Fe^{3+} + 8H_2O = 15Fe^{2+} + 2SO_4^{2-} + 16H^{+}}.$$

In this third stage, the rate of acid production is high (fig. 3) and is limited by the concentration of ferric ions. Inhibition of T. ferrooxidans would prevent ferric oxidation of pyrite and should therefore reduce acid production by at least 75 pct.
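The three-stage description can be condensed into a rough decision rule. The sketch below is illustrative only: the pH 3 boundary for stage 3 comes from the text, but using net alkalinity (alkalinity minus acidity, a hypothetical input) to decide whether the neutralizing capacity of the environment has been exceeded is an assumption, and the function name is hypothetical. Real sites grade continuously from one stage to the next, and the pH next to the pyrite surface can differ from the pH of the drainage.

```python
def classify_stage(ph, net_alkalinity_mg_l):
    """Rough stage assignment following the three-stage description.

    net_alkalinity_mg_l: alkalinity minus acidity (assumed input); a positive
    value is taken to mean the neutralizing capacity is not yet exceeded.
    Returns (stage number, short description).
    """
    if ph < 3.0:
        return 3, ("ferric iron oxidizes pyrite; T. ferrooxidans regenerates "
                   "ferric iron; rate limited by ferric iron concentration")
    if net_alkalinity_mg_l > 0:
        return 1, ("slow oxidation by air and by T. ferrooxidans at comparable "
                   "rates; acid neutralized by natural alkalinity")
    return 2, ("acid accumulating; oxidation of iron by oxygen slowing while "
               "bacterial iron oxidation increases")


for ph, net_alk in [(7.0, 50), (4.5, -20), (2.5, -500)]:   # hypothetical readings
    stage, note = classify_stage(ph, net_alk)
    print(f"pH {ph}: stage {stage} ({note})")
```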

When untreated acid mine drainage is mixed with surface waters, it has a deleterious effect upon the receiving stream, usually making it unsightly and inhospitable to most forms of aquatic life. Most organisms cannot tolerate an acid environment. Also, the ferrous iron in acid mine drainage consumes the oxygen in a stream, and the precipitated ferric hydroxide covers the streambed, limiting the oxygen available to benthic organisms. The acidity and total solids adversely affect aquatic life, and heavy metal ions present in some mine waters have an increased toxicity in acid solutions. In streams severely polluted with acid mine drainage, there are usually no complex aquatic plants, no fish, few if any benthic invertebrates, and only a few species of algae. In some cases, the invertebrates and algae that can survive grow to nuisance proportions.

When acid mine drainage is produced in an active mine, environmental laws require that it meet minimum standards before it is discharged into surface streams. According to the Federal Water Pollution Control Act, water from coal mines must have a pH between 6 and 9 and must contain no more than an average of 3.5 ppm and a maximum of 7 ppm iron, no more than an average of 2 ppm and a maximum of 4 ppm manganese, and no more than 70 ppm total suspended solids.
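The discharge limits above can be expressed as a simple screening check. In this sketch the averaging period for iron and manganese is left to the caller (the text does not state it), the 70-ppm suspended-solids limit is treated as a per-sample maximum, and the function name and sample values are hypothetical; it is not a substitute for the regulatory text.

```python
from statistics import mean

# Limits as summarized in the text; concentrations in ppm.
PH_RANGE = (6.0, 9.0)
METAL_LIMITS = {                 # metal: (average limit, maximum limit)
    "iron": (3.5, 7.0),
    "manganese": (2.0, 4.0),
}
TSS_MAX = 70.0                   # assumed to apply to each sample


def screen_discharge(samples):
    """Return a list of limit exceedances for a sequence of sample dicts."""
    problems = []
    low, high = PH_RANGE
    for i, s in enumerate(samples):
        if not low <= s["pH"] <= high:
            problems.append(f"sample {i}: pH {s['pH']} outside {low}-{high}")
        if s["tss"] > TSS_MAX:
            problems.append(f"sample {i}: TSS {s['tss']} ppm exceeds {TSS_MAX}")
    for metal, (avg_limit, max_limit) in METAL_LIMITS.items():
        values = [s[metal] for s in samples]
        if mean(values) > avg_limit:
            problems.append(f"{metal}: average {mean(values):.2f} ppm exceeds {avg_limit}")
        if max(values) > max_limit:
            problems.append(f"{metal}: maximum {max(values)} ppm exceeds {max_limit}")
    return problems


# Hypothetical samples from one averaging period.
samples = [
    {"pH": 7.2, "iron": 2.1, "manganese": 1.5, "tss": 35},
    {"pH": 8.9, "iron": 6.5, "manganese": 2.8, "tss": 40},
]
for problem in screen_discharge(samples):
    print(problem)
```

In this example every individual sample is within the pH, suspended-solids, and maximum-concentration limits, yet the averages for iron and manganese are exceeded, which is why both kinds of limit are stated.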

Standard acid mine drainage treatment methods involve neutralization of the acid by the addition of a base, oxidation of ferrous iron in an aeration tank or pond, and precipitation of iron compounds in a settling pond. Manganese, unless present in high concentrations, is usually removed with the iron.

The chemistry of the basic treatment method is relatively straightforward. Neutralization is the reaction of the acid with a base:

$$\mathrm{H_2SO_4 + Ca(OH)_2 = CaSO_4 + 2H_2O}.$$

If limestone is used as the neutralizing agent, the reaction is

$$\mathrm{H_2SO_4 + CaCO_3 = CaSO_4 + H_2O + CO_2}.$$

To remove the ferrous iron, the neutralized water is aerated to produce ferric ions, which react with the base to form insoluble ferric hydroxides:

$$\mathrm{Fe_2(SO_4)_3 + 3Ca(OH)_2 = 2Fe(OH)_3 + 3CaSO_4}.$$

With limestone as the neutralizing agent, the reaction is

$$\mathrm{Fe_2(SO_4)_3 + 3CaCO_3 + 3H_2O = 2Fe(OH)_3 + 3CaSO_4 + 3CO_2}.$$
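The neutralization reactions above fix the theoretical reagent requirement. The sketch below computes pounds of hydrated lime, Ca(OH)2, or limestone, CaCO3, per pound of sulfuric acid and per pound of iron precipitated from ferric sulfate, using standard atomic weights. These are stoichiometric minimums under the stated reactions; the variable names are illustrative, and practical doses are usually higher because reagent purity and reaction efficiency are below 100 pct.

```python
# Standard atomic weights (g/mol).
CA, C, O, H, S, FE = 40.078, 12.011, 15.999, 1.008, 32.06, 55.845

CA_OH_2 = CA + 2 * (O + H)     # hydrated lime, Ca(OH)2
CACO3 = CA + C + 3 * O         # limestone, CaCO3
H2SO4 = 2 * H + S + 4 * O      # sulfuric acid

# One mole of base per mole of H2SO4 (both neutralization reactions).
lime_per_lb_acid = CA_OH_2 / H2SO4
limestone_per_lb_acid = CACO3 / H2SO4

# Three moles of base per mole of Fe2(SO4)3, i.e. per two moles of iron.
lime_per_lb_iron = 3 * CA_OH_2 / (2 * FE)
limestone_per_lb_iron = 3 * CACO3 / (2 * FE)

print(f"per lb H2SO4: {lime_per_lb_acid:.2f} lb Ca(OH)2 or {limestone_per_lb_acid:.2f} lb CaCO3")
print(f"per lb Fe:    {lime_per_lb_iron:.2f} lb Ca(OH)2 or {limestone_per_lb_iron:.2f} lb CaCO3")
```

The limestone figures are higher because CaCO3 has a larger molar mass than Ca(OH)2 while neutralizing the same amount of acid per mole.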

Other alkaline agents can be used, but because of cost, ease of handling, or other environmental effects, lime is most commonly used. Other methods that have been suggested for treating acid mine drainage include reverse osmosis, ion exchange, and flash distillation. However, these are not treatment methods per se, but methods for producing potable water that were suggested for acid mine drainage only where there is no alternative source of potable water. With these methods a concentrated acid brine or sludge must be disposed of, and because of technical problems and high cost, processes like these are not commonly considered as reasonable alternatives to neutralization.

Bureau of Mines Acid Mine Drainage Research

Methods to reduce the pollution from ferruginous acid water from coal mines and spoil banks usually involve either reducing the rate of acid formation or increasing the efficiency of mine water treatment. Although acid mine drainage has been a problem for many years, there have been few significant innovations in its prevention and control. The Bureau of Mines was involved in much of the acid mine drainage research conducted before 1970. The Bureau performed analytical studies to determine the acidity, composition, and corrosivity of acid mine water. Sources of and variations in acid mine drainage, the effect of pyrite content, rock dusting, and chemical neutralization also were investigated. In addition, the Bureau of Mines was a major contributor to the development of mine seals during the 1930’s.

During the 1970’s the Bureau of Mines had only a limited acid mine drainage program, as other Federal agencies, such as the Environmental Protection Agency (EPA), assumed primary responsibility for water pollution research. Because of the continuing problems in acid mine drainage control, the Bureau of Mines has recently developed a comprehensive program of acid mine drainage research. This program is directed toward several areas, including improved prediction of acid potential, improved mine planning, at-source control of acid formation, improved reclamation, improved water treatment, techniques to control acid drainage at abandoned mines and waste piles, and assessment of ground water contamination in major mining districts.

Mine Sealing

In 1928 it was reported that mines sealed by natural caving produced less acid than unsealed mines in the same locality. It was assumed that limiting the amount of air and water entering the mine reduced the rate of acid formation. After a 1-year field study, an extensive mine sealing program was undertaken in 1933 through the Works Progress Administration and the Civil Works Administration. Records indicate that sealing did produce favorable results in West Virginia, Ohio, and Pennsylvania. However, the Federal sealing programs were short term and did not provide funds for maintaining the seals. Lack of maintenance, natural deterioration, vandalism, and subsequent mining combined to reduce the long-term effectiveness of the mine sealing program.

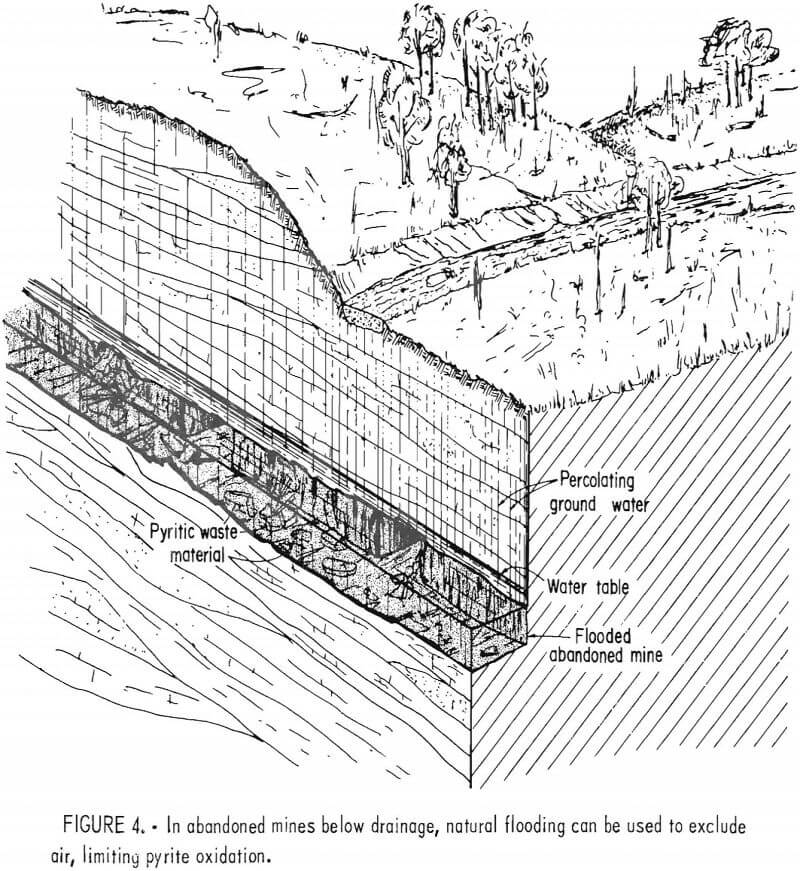

Despite the lack of long-term monitoring, mine sealing is considered a standard method for reducing acid formation from coal mines. In mines located below drainage, natural flooding is used to cover the pyritic material and exclude air (fig. 4). When the air supply is eliminated, it is assumed that the pyrite is no longer oxidized. If the water pool is stable and the additional hydraulic pressure creates no downdip drainage problems, flooding is considered adequate.

This effect is currently being observed in the Northern Field of the Pennsylvania Anthracite Region, where flooded workings underlie approximately 43,000 acres. The Bureau of Mines is currently studying the hydrology and geochemistry of this large mine pool complex because the pool is well established, having started to form over 30 years ago. Owing to its size and flow characteristics, water draining from the mines remained acid until only a few years ago, casting some doubt on the effectiveness of flooding. However, our study has shown that the water in the mine pools has now recovered and is in fact slightly alkaline.

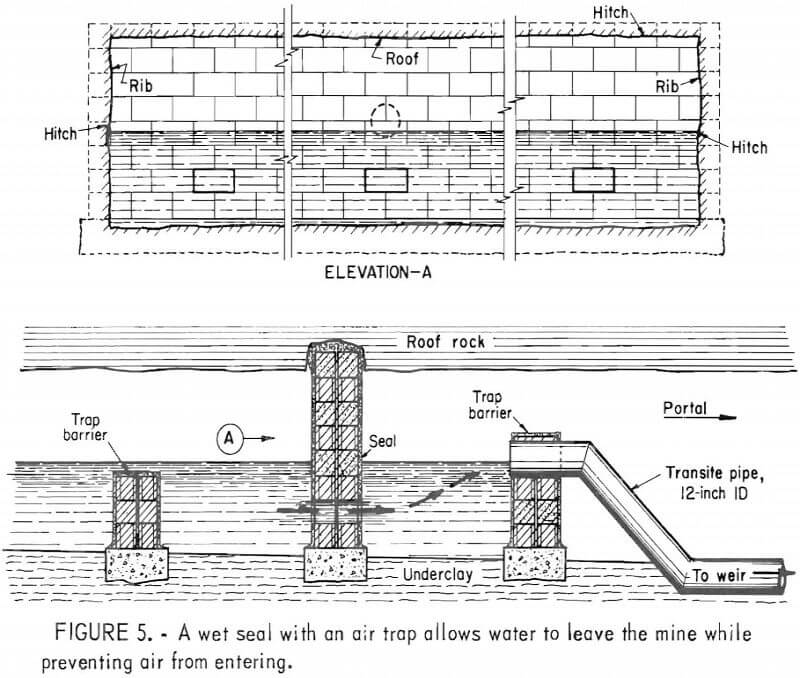

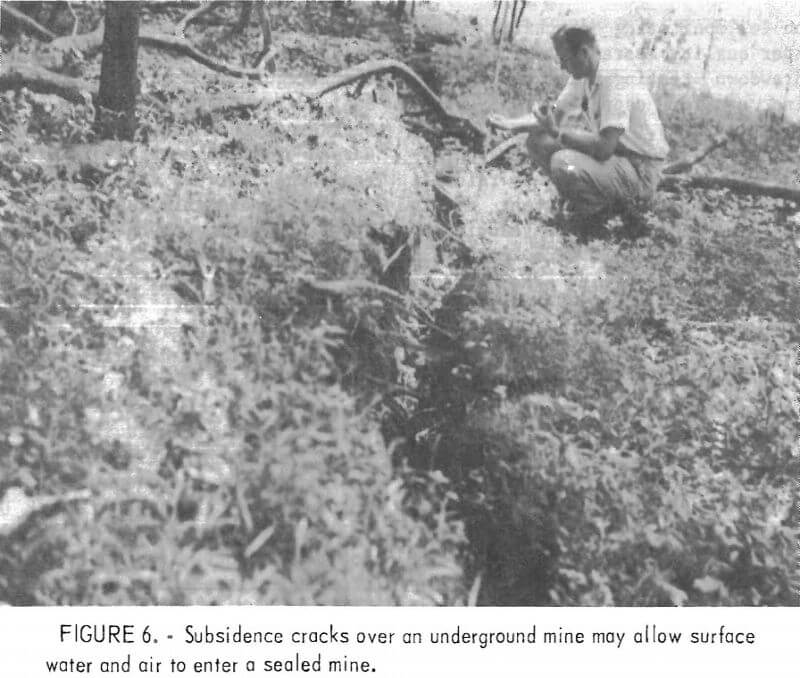

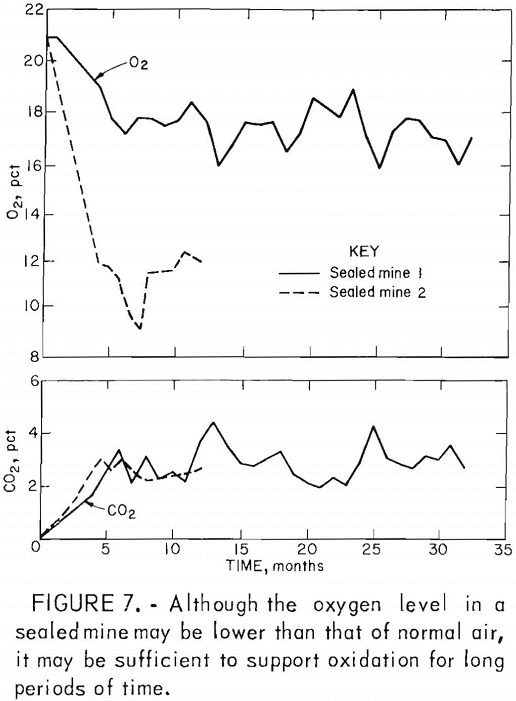

In deep mines above drainage, flooding is generally ineffective owing to seepage through surface fractures and the tendency of the water to migrate to other discharge points. In such mines, conventional seals are airtight and/or watertight masonry walls hitched into the roof, bottom, and ribs of mine openings (fig. 5). Subsidence fractures that extend from the mine through the caved overburden to the surface serve as open pathways for the migration of air and the drainage of surface water (fig. 6). To effectively seal these mines, it is also necessary to backfill and grade all fractures that extend from the mine to the surface. Although aerial photography and infrared scanning can be used to locate zones of high permeability, this process is expensive and not sensitive enough to delineate relatively small subsidence fractures. Indiscernible joints and subsidence fractures can affect the overall effectiveness of a mine sealing project by allowing a mine to “breathe” (fig. 7). Monitoring by the Bureau of Mines has shown a general correlation between the oxygen level in the mine, the differential pressure across mine seals, and changes in the barometric pressure.

Because most mine sealing evaluations are based on short-term observation, the Bureau is now evaluating the long-term effectiveness of mine sealing in several areas. For example, at Moraine State Park grouted double bulkhead seals were used in an area where an artificial lake was built over 10 years ago. In this area, water discharged from sealed areas has a total mean acid load of 206 lb/day 10 years after sealing, compared with 540 lb/day before sealing. The discharge rate varies between 250 and 500 gpm, and the total iron has a mean value of 53 lb/day at present, compared with 46 lb/day prior to sealing. Over the 10-year period the variation in discharge rate, acidity, alkalinity, and total iron has declined, indicating that a mine pool has been created as a result of sealing. In the Highland Fuel Mine area (Butler County, Pa.) water samples are obtained from discharge points and from observation holes behind the seals. The discharge from the sealed area varies from 25 to 80 gpm, and the pH of the water is approximately 4. Total iron in the discharge averages 12 to 14 ppm. Water samples from the mine pool behind the seals have an average pH above 6 and a higher total iron content.
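The Moraine State Park figures can be put on a common footing with two short calculations: the percentage change in each load, and the concentration implied by a load at a given flow. The sketch uses the standard conversion load (lb/day) = concentration (mg/l) × flow (gpm) × 0.01202. Because the text reports a mean load and a flow range rather than paired values, the concentrations it returns only bracket the likely mean acidity, and the text does not say in what units acidity is expressed. The function names are hypothetical.

```python
# lb/day per (mg/l x gal/min): 1,440 min/day x 3.785411784 l/gal / 453,592.37 mg/lb
LOAD_FACTOR = 1440 * 3.785411784 / 453_592.37   # about 0.01202


def percent_change(before, after):
    """Signed percentage change from `before` to `after`."""
    return 100.0 * (after - before) / before


def concentration_mg_l(load_lb_per_day, flow_gpm):
    """Mean concentration implied by a daily load at a steady flow."""
    return load_lb_per_day / (flow_gpm * LOAD_FACTOR)


print(f"acid load change: {percent_change(540, 206):+.1f} pct")   # about -61.9
print(f"iron load change: {percent_change(46, 53):+.1f} pct")     # about +15.2
for flow in (250, 500):
    print(f"{flow} gpm: acidity about {concentration_mg_l(206, flow):.0f} mg/l")
```

The calculation makes the contrast in the paragraph explicit: the acid load fell by roughly three-fifths after sealing, while the iron load rose by about 15 pct.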

Recent inspection of wet, dry, and hydraulic and/or bulkhead seals constructed 13 years ago in abandoned mines near Elkins, W. Va., has shown that five of the clay seals have deteriorated, allowing acid seepage to kill vegetation on the slopes below the seal. Yellowboy precipitate has restricted the drainage opening of one wet masonry block seal. Water quality in at least one stream receiving discharge from the sealed mines has not improved significantly since the mines were sealed.

The Bureau’s evaluation of mine seals installed approximately 10 or more years ago has indicated several serious problems with conventionally constructed seals. First, completely sealing a mine above drainage is very difficult and may be very expensive. Complete sealing requires that all zones of high permeability be located and sealed. The usual procedure is to locate subsidence fractures, cover the area with a compacted clay blanket, and regrade it. To be effective, this procedure requires that all fractures be located and that no further subsidence occur. The evidence indicates that surface sealing is rarely effective for long periods. Another problem with mine sealing is that masonry seals require continued maintenance. Even if seals are adequately built and maintained, the improvement in water quality directly attributable to the sealing process has not been adequately determined.

To improve the effectiveness of mine sealing, the Bureau of Mines is studying sealed mines to determine factors controlling changes in water quality and is developing techniques to build more efficient, less expensive seals. In addition to monitoring discharge rates and water quality parameters, at some sites drawdown testing will be used to determine aquifer capacity and water level fluctuations. Subsidence areas will be surveyed during and after drawdown testing. Rainfall data will also be collected, and fluorescent dye will be used to trace the direction and velocity of ground water flow in the mine pool; together these measurements will help identify the factors that affect water quality when mines are sealed conventionally.

The Bureau also is involved in a demonstration of pneumatic stowing of limestone as a method of producing more effective mine seals at a cost equal to or less than that of conventional seals. Observations of mine seals to date indicate that seals constructed of cement block and grout do not substantially improve water quality, may not effectively contain impounded water, and are subject to failure and/or leakage. The construction of a competent seal requires that it be permanently attached to the roof, ribs, and floor. Frequently the failure of conventional seals is due to collapse of weak strata over the seal. Pneumatic stowing produces a highly compacted limestone plug which supports roof and ribs. Movement of surrounding strata will cause further compaction of the limestone. Concrete or other additives to the limestone can be used to produce a stronger seal. If appropriate, the limestone seal can be constructed to allow low flows of mine water. If the water is only slightly acid, passage through the limestone can effectively neutralize it. In a field trial of pneumatic stowing, the limestone seals were found to be satisfactory in terms of the safety of construction, the strength of the seal, and the cost. Long-term monitoring of these seals is planned to determine their effect on water quality.

In deep mines, the construction of mine seals limits the water discharge rate and creates a mine pool covering some or all of the pyritic material. If inundation is to be an effective method of controlling acid drainage, outcrop barrier pillars must be capable of withstanding the hydrostatic head created by the impounded water. In general, Federal and State regulations on mine closure do not deal directly with criteria for outcrop barriers used to flood the mine. A Bureau of Mines contract report details the factors that affect the competency and stability of outcrop barriers. According to the study, an outcrop barrier should be wide enough to prevent seepage, have sufficient overburden to prevent a blowout, and provide a stable slope. Curtain grouting, compartmentalized barriers, and relief wells can be used to reinforce outcrop barriers.

In mines that have been successfully inundated, water quality is often good in the mine pool but generally acid after seeping through the barrier. Use of a relief well system, as suggested in the contract report, would remove water from the mine pool before its exposure to pyrite-bearing coal and would also limit the hydrostatic head that the outcrop barrier would have to withstand. Properly designed outcrop barriers could reduce acid formation in abandoned mines and allow maximum coal removal.

Oxygen may also be a limiting factor in coal refuse piles and some surface mines. The Bureau of Mines is currently using soil gas probes to study oxygen diffusion characteristics in coal refuse piles; preliminary results indicate that pyrite oxidation is limited to a near-surface layer. A similar study will soon be initiated in an abandoned surface mine. Followup research will concentrate on current reclamation methods to determine if any reduce oxygen diffusion or result in the consumption of oxygen, thus limiting acid formation.

Acid Mine Drainage Treatment

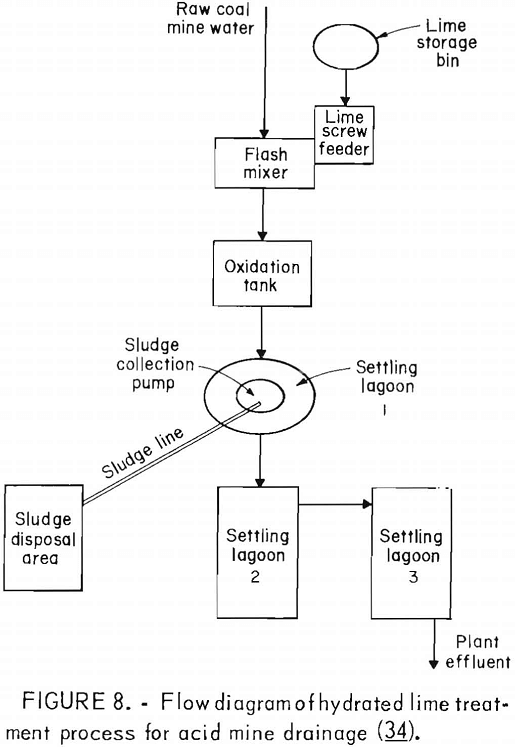

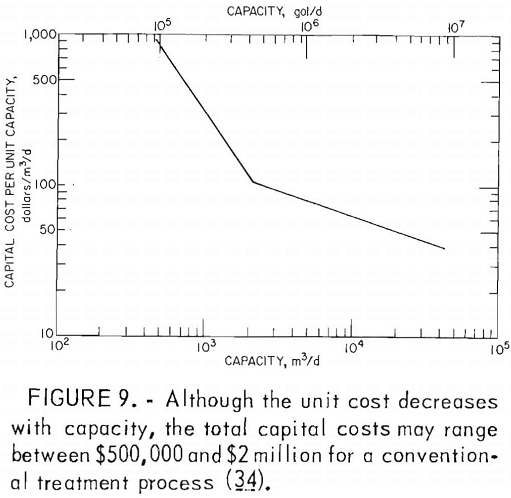

Many factors influence the quantity and quality of water handled by a mining operation, but it is not unusual for a single mine to treat 1 million gallons of acid water per day. Water from coal mines is usually treated by chemical neutralization. At present, lime is the most commonly used neutralizing agent. Generally, a small volume of mine water is added to powdered lime to produce a slurry which, added to the raw mine water, raises the pH to between 7 and 9. The water is aerated to oxidize the ferrous iron and is then transferred to a settling pond for precipitation of the iron compounds (fig. 8). Although commonly used, lime neutralization has several inherent problems. Lime is a caustic material and can produce water with an unacceptably high pH. Lime treatment produces a flocculent precipitate that does not form a dense, easily handled sludge. Research by EPA failed to produce any economical improvement in sludge disposal methods. The Bureau is evaluating the technology and economics of current sludge disposal practices to determine the most promising area of research. Sludge disposal problems and high cost (fig. 9) are the principal reasons for seeking improved acid mine drainage treatment methods.
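For a mine treating 1 million gallons per day, the daily hydrated-lime requirement follows from the flow and the acidity of the raw water. The sketch below assumes acidity is reported in mg/l as CaCO3 (a common convention, but an assumption here), uses a hypothetical acidity of 500 mg/l, and converts CaCO3 equivalents to Ca(OH)2 by molar mass. It gives a stoichiometric minimum only, and the function name is hypothetical.

```python
L_PER_GAL = 3.785411784
MG_PER_LB = 453_592.37
CA_OH_2 = 40.078 + 2 * (15.999 + 1.008)     # g/mol
CACO3 = 40.078 + 12.011 + 3 * 15.999        # g/mol


def lime_lb_per_day(flow_gal_per_day, acidity_mg_l_as_caco3):
    """Stoichiometric Ca(OH)2 demand for a given flow and acidity."""
    caco3_equiv_lb = flow_gal_per_day * L_PER_GAL * acidity_mg_l_as_caco3 / MG_PER_LB
    return caco3_equiv_lb * CA_OH_2 / CACO3


demand = lime_lb_per_day(1_000_000, 500)    # hypothetical acidity
print(f"about {demand:,.0f} lb of Ca(OH)2 per day ({demand / 2000:.2f} short tons)")
```

Because the demand is proportional to both flow and acidity, doubling either doubles the lime required, and with it the volume of sludge to be handled.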

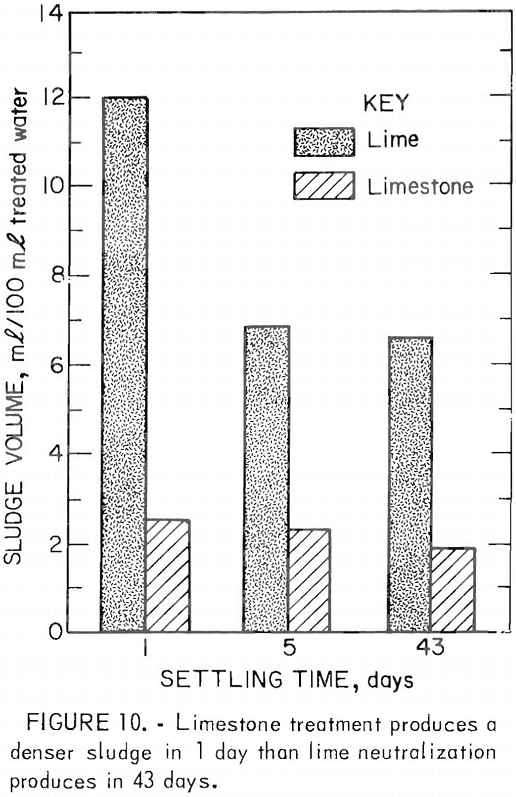

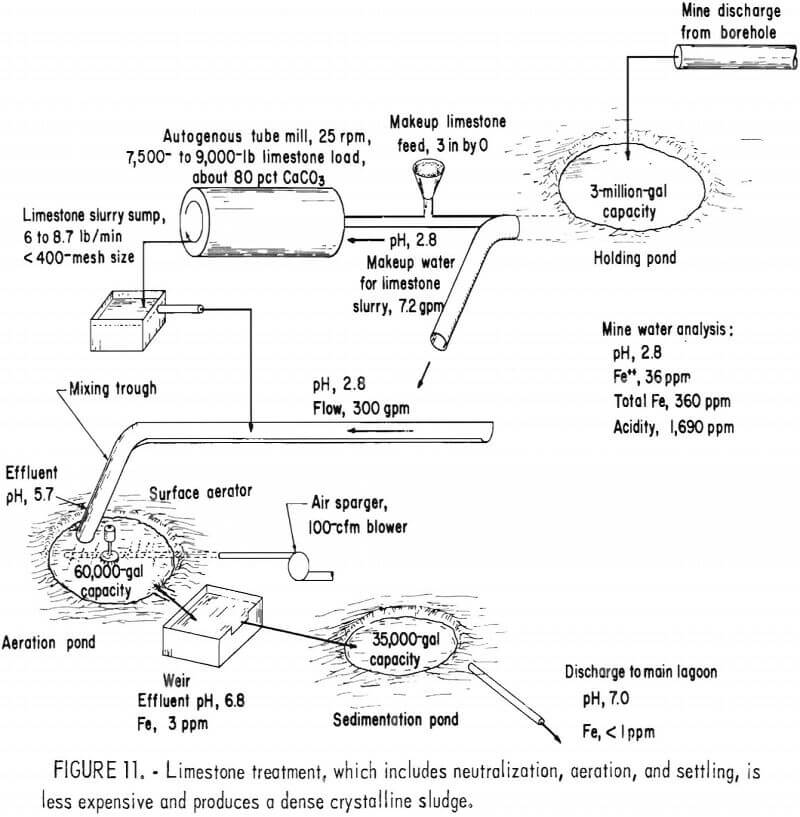

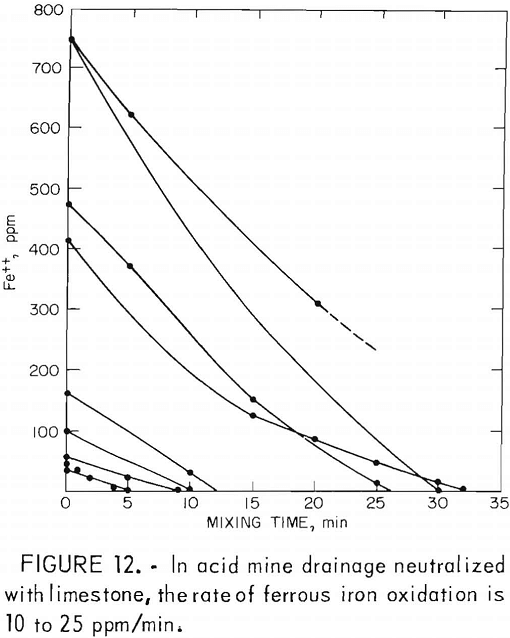

As an alternative treatment method, the Bureau has developed a neutralization process using limestone instead of lime. Limestone is readily available and costs less than lime, and limestone treatment produces a dense crystalline sludge (fig. 10). In the Bureau treatment process, very fine (5 to 10 µm) limestone is produced by wet autogenous grinding in a tube mill. The limestone slurry is mixed with the acid mine drainage, and the drainage is aerated to remove carbon dioxide and to oxidize the ferrous iron (fig. 11). The gypsum-iron oxide sludge separates from the drainage in settling ponds. Although limestone treatment has the advantages of lower material costs, simplicity of plant design and operation, the use of a less hazardous material, and a denser sludge, its use is limited by the rate at which ferrous iron is oxidized. With limestone neutralization, the pH of the treated drainage is between 6.8 and 8.0 and the iron oxidation rate ranges from 10 to 25 ppm/min (fig. 12).
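Because the limestone process is limited by ferrous iron oxidation, the reported rates translate directly into aeration time and, for a given flow, aeration volume. The sketch below treats the rate as constant over the run (a simplification; the actual rate depends on pH, dissolved oxygen, and iron concentration), and the 150-ppm influent ferrous iron and the 1-million-gal/day flow are hypothetical inputs. The function names are also hypothetical.

```python
def aeration_minutes(fe_in_ppm, fe_out_ppm, rate_ppm_per_min):
    """Time to oxidize ferrous iron at a constant (zero-order) rate."""
    if rate_ppm_per_min <= 0:
        raise ValueError("rate must be positive")
    return max(fe_in_ppm - fe_out_ppm, 0.0) / rate_ppm_per_min


def aeration_volume_gal(flow_gpm, minutes):
    """Tank or pond volume needed to hold the flow for the given time."""
    return flow_gpm * minutes


flow_gpm = 1_000_000 / 1440          # 1 million gal/day, hypothetical
for rate in (10, 25):                # ppm/min, range reported for limestone
    t = aeration_minutes(150, 0, rate)
    v = aeration_volume_gal(flow_gpm, t)
    print(f"{rate} ppm/min: {t:.0f} min, about {v:,.0f} gal of aeration volume")
```

At the slower end of the reported range the required aeration volume is two and a half times that at the faster end, which is why a faster oxidation step would reduce both power and land requirements.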

To minimize the power and land requirements for aeration, the Bureau is investigating the use of catalysts to increase the rate of ferrous iron oxidation at low pH. A laboratory study by the Bureau of Mines indicated that activated carbon was an effective catalyst in the oxidation of ferrous iron. In batch tests, the ferrous iron content of acid mine drainage flowing through an aspirated column of activated carbon was reduced from over 700 ppm to less than 10 ppm in approximately 1 minute. In these tests, approximately 5,000 ml of the acid drainage was passed through 200 grams of activated carbon before the catalytic effect was noted. During this period the pH of the effluent was higher than that of the mine drainage feed. Because the increase in iron oxidation rate appeared only after the carbon had stopped altering the pH of the drainage, the onset of the catalytic effect was attributed to acid conditioning of the carbon.

A pilot-scale field study was conducted to examine the effect of activated carbon on the ferrous iron oxidation rate using a three-stage continuously stirred reactor. Continuous agitation of the carbon in the mine water provided more efficient water-carbon contact and prevented channeling and/or fouling. A weir device at the top of the reactor controlled carbon loss. In this system the ferrous iron oxidation rates were not as high as those reported in the previous laboratory study. Acid conditioning the carbon did not significantly affect the iron oxidation rate. When the pH of the influent water was adjusted to 5, the rate of iron oxidation increased, but not to the levels reported in the laboratory study. Comparison of the field and laboratory rate data suggested that the high iron oxidation rate reported in the laboratory may have been due to the growth of iron-oxidizing bacteria on the carbon rather than to direct catalysis by the carbon. T. ferrooxidans is an aerobic bacterium that derives energy from the oxidation of ferrous iron in acid mine drainage, and the activated carbon may provide a substrate to which the bacteria adhere. However, recent laboratory tests showed no significant bacterial activity or catalytic action.

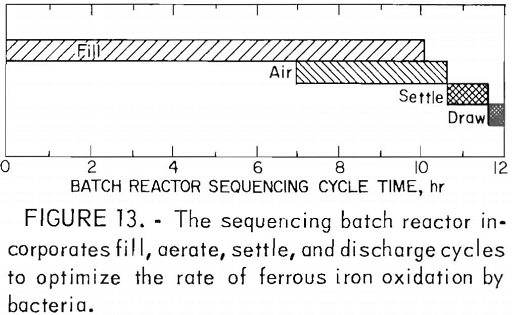

The Bureau is also funding an investigation of the growth of iron-oxidizing bacteria on clay particles (5,000 mg/l) in a sequencing batch reactor. In this test, bacteria are grown on clay particles in a ferrous sulfate solution containing appropriate inorganic nutrients. The reactor, a system of fill, aerate, settle, and discharge cycles (fig. 13), is designed to produce an effluent with an Fe²⁺ concentration of less than 10 mg/l. The retention of bacteria on the clay particles depends on the ferrous iron content: if the Fe²⁺ content of the system decreases to 5 mg/l, the bacteria are desorbed from the clay and removed from the reactor in the discharge cycle. Using the sequencing batch reactor, a ferrous iron oxidation rate of approximately 290 mg/l/hr has been reported for estimated bacterial populations of approximately 5 × 10⁷ cells/ml.
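The fill, aerate, settle, and discharge cycle can be sketched as a simple sequence in which the aeration phase is sized so that ferrous iron ends below the 10 mg/l design target without falling to the 5 mg/l level at which bacteria desorb from the clay. The sketch assumes a constant oxidation rate equal to the reported 290 mg/l/hr and aims for the midpoint between the two thresholds; the function names, the midpoint rule, and the 300 mg/l starting value are hypothetical.

```python
RATE_MG_L_HR = 290.0        # reported ferrous iron oxidation rate
EFFLUENT_TARGET = 10.0      # design effluent Fe2+, mg/l
DESORPTION_LEVEL = 5.0      # Fe2+ level at which bacteria leave the clay, mg/l


def aeration_hours(fe2_start, rate=RATE_MG_L_HR):
    """Aeration time to reach the midpoint between the two thresholds."""
    goal = (EFFLUENT_TARGET + DESORPTION_LEVEL) / 2
    return max(fe2_start - goal, 0.0) / rate


def run_cycle(fe2_start, rate=RATE_MG_L_HR):
    """Walk one fill-aerate-settle-discharge cycle; return final Fe2+ and a log."""
    log = [f"fill: Fe2+ {fe2_start:.0f} mg/l"]
    hours = aeration_hours(fe2_start, rate)
    fe2 = max(fe2_start - rate * hours, 0.0)
    log.append(f"aerate: {hours:.2f} h, Fe2+ now {fe2:.1f} mg/l")
    if fe2 <= DESORPTION_LEVEL:
        log.append("warning: bacteria may desorb and be lost at discharge")
    log.append("settle: clay particles and attached bacteria retained")
    log.append("discharge: clarified effluent released")
    return fe2, log


final, steps = run_cycle(300.0)
print("\n".join(steps))
```

Aiming between the two thresholds, rather than simply aerating as long as possible, reflects the constraint described above: overshooting below 5 mg/l would wash the bacterial population out of the reactor.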

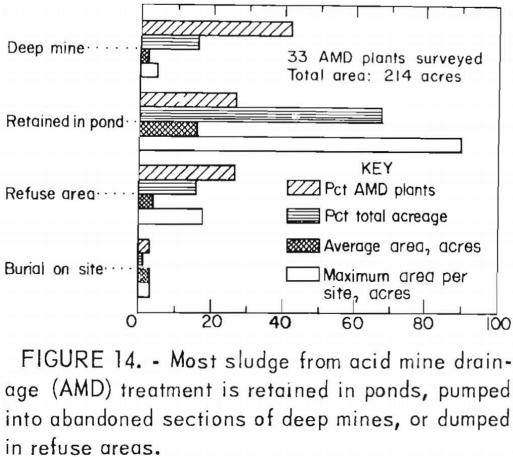

The disposal of sludge produced by neutralization of acid mine drainage is expensive and potentially one of the most persistent problems in its treatment. As a preliminary step, the current sludge disposal practices of 33 treatment plants were assessed to determine the magnitude of sludge production, current disposal practices, and constraints on their usage. The primary sludge disposal problem involves the large volume of sludge generated and the scarcity of approved disposal sites. The four most commonly used methods of sludge disposal are deep mine disposal, retention in ponds, incorporation in coal refuse piles, and surface burial. The amount of land required for disposal is usually the determining factor in the overall cost, although transportation, equipment, maintenance, and labor are also significant (fig. 14). The development of methods to efficiently and economically increase the density of the sludge would substantially reduce the cost of sludge disposal.

Although there are other methods potentially applicable to acid mine drainage treatment, such as ion exchange, reverse osmosis, and flash distillation, the Bureau is not currently investigating these. Most of these processes are high in cost, have high power requirements, and produce a highly concentrated toxic brine or sludge which is more difficult to dispose of than the original sludge. The Bureau’s research program in treating acid drainage from active mines is directed toward development and demonstration of a complete limestone treatment system, including a suitable catalyst for rapid oxidation of ferrous iron and sludge disposal.

The Bureau is also investigating a low-cost, low-maintenance treatment system for small streams contaminated by acid drainage from abandoned mines. Conventional treatment is not applicable because of the low flow rate and the remote rural location. It has been observed that bogs containing sphagnum moss and/or cattails remove iron by ion exchange and precipitation. Subsequent neutralization at limestone outcrops completes an effective natural treatment system. Artificial duplication of this natural process could improve water quality in many small streams.

The Bureau will test the practicality of duplicating this natural treatment system at a low-flow acid drainage discharge point in Northern Appalachia.

The system will include an artificial sphagnum moss bog followed by limestone rubble. The system is designed so that the drainage will pool in the moss, but will not raise the upstream water level enough to allow the water to flow around the treatment system. Monitoring the pH, Eh, temperature, iron and sulfate concentrations, and total acidity of the stream above and below the artificial bog will determine its effect on water quality. The monitoring will extend over at least 6 months so that the effect of uncontrollable parameters such as ambient temperature, rainfall, and stream flow rate can be determined. If this low-cost, low-maintenance system proves to be effective, it could be used to restore many small streams in rural areas.

At-Source Control of Acid Mine Drainage

Of the millions of dollars spent on acid mine drainage each year, the major portion is spent on treatment. But treatment is not the best solution to most acid mine drainage problems. Treatment has the disadvantage of being necessary for as long as the acid discharge continues and thus requires manpower, surface facilities, and a sludge disposal area indefinitely.

Since acid drainage results from the oxidation of pyrite associated with coal and overburden strata, limiting the rate of pyrite oxidation would reduce the amount of acid formed. T. ferrooxidans normally catalyzes the pyrite oxidation and accelerates the initial acidification of freshly exposed coal and overburden. Inhibiting bacterial activity, therefore, would limit the rate of acid production and, in combination with proper reclamation, would reduce substantially the total amount of acid produced. Of the multitude of potential bactericidal agents, certain biodegradable anionic surfactants (detergents) have been found to control T. ferrooxidans in an economical and environmentally safe manner.

Field tests were recently conducted by the Bureau of Mines on both active and abandoned coal refuse piles in West Virginia. Sodium lauryl sulfate was applied at rates of 20 to 55 gal of 30-pct solution per acre, with a dilution range between 1:100 and 1:1000. Application rates were based on laboratory adsorption tests; dilution rates were dictated by site characteristics. At each site, drainage pH rose from approximately 2.5 to 5.5 or higher, with similarly dramatic decreases in acidity, iron, and sulfates.
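Because pH is a logarithmic scale, the rise from about 2.5 to 5.5 corresponds to a thousandfold drop in hydrogen-ion activity. The short Python check below shows the arithmetic. It concerns free hydrogen ion only; total acidity also includes mineral acidity from dissolved iron and other metals, which is why acidity is reported separately from pH.

```python
def h_ion_reduction_factor(ph_before: float, ph_after: float) -> float:
    """Factor by which hydrogen-ion activity falls when pH rises.

    pH = -log10(a_H+), so a_before / a_after = 10**(ph_after - ph_before).
    """
    return 10 ** (ph_after - ph_before)


print(h_ion_reduction_factor(2.5, 5.5))
```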

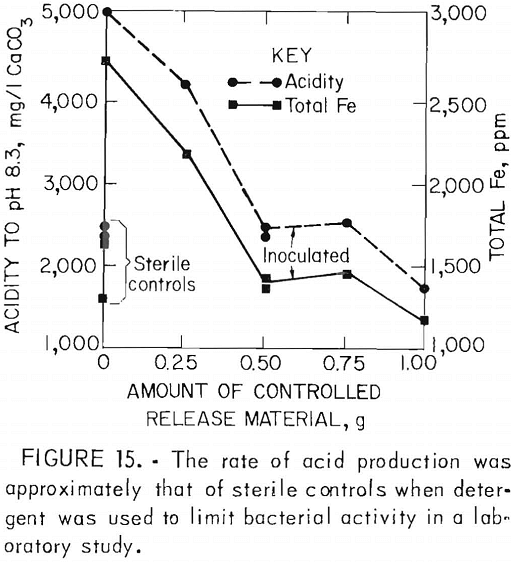

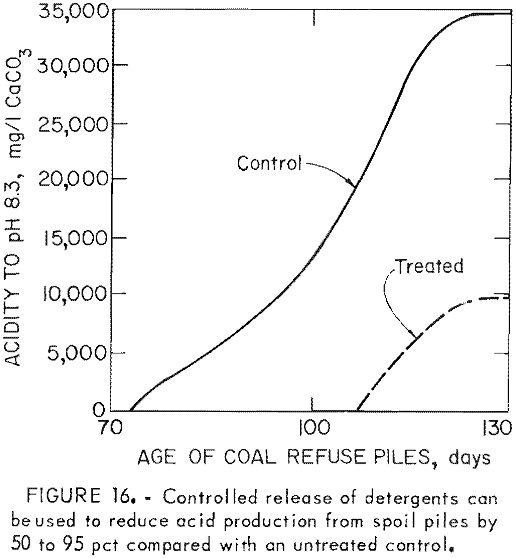

One disadvantage of the detergents is that their effect is temporary; the bacteria typically repopulate within 3 to 4 months. To provide long-term control, the surfactants have been incorporated into rubber pellets, which can be applied early to a refuse pile or pyritic mine spoil. These pellets gradually release the surfactant into infiltrating rainwater. In laboratory studies (fig. 15) and pilot-scale tests (fig. 16), controlled release of sodium lauryl sulfate reduced acid production by 50 to 95 pct. Full-scale field tests are now in progress.
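The pellets are meant to stretch a single application well beyond the 3 to 4 months that a spray application lasts. One simple way to reason about this, assumed here rather than taken from the Bureau's tests, is to treat release from the pellets as first order, so the release rate decays exponentially. The Python sketch below estimates how long the release rate stays above a chosen effective level; the loading `m0`, rate constant `k`, and `threshold` are hypothetical values for illustration, not measured parameters.

```python
import math


def months_until_release_below(m0: float, k: float, threshold: float) -> float:
    """Time (months) until the release rate k*M(t) falls below `threshold`.

    Assumes first-order release: M(t) = m0 * exp(-k t), so the release
    rate is k * m0 * exp(-k t). Setting that equal to `threshold` gives
    t = ln(k * m0 / threshold) / k.
    """
    initial_rate = k * m0
    if initial_rate <= threshold:
        return 0.0
    return math.log(initial_rate / threshold) / k


# Hypothetical values for illustration only (not from the field tests).
m0 = 100.0        # surfactant loaded in pellets, arbitrary mass units per acre
k = 0.1           # assumed first-order release constant, 1/month
threshold = 1.0   # assumed minimum effective release rate, mass units/month
print(f"{months_until_release_below(m0, k, threshold):.1f} months")
```

In this model a smaller `k` spreads release over a longer period but lowers the initial release rate (k × m0), so pellet design trades duration against the dose delivered early on.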

Another potential approach to at-source control of acid production requires the establishment of an alkaline environment. As already discussed, pyrite oxidation is slow at near-neutral pH. A source of alkalinity such as lime, if added to recently exposed pyritic material, will retard or prevent acidification. The Bureau of Mines is currently investigating whether establishing a strongly alkaline environment, even temporarily, will slow pyrite oxidation to the point where limestone alone is sufficient to maintain near-neutral pH.

Water handling procedures for underground coal mines generally combine gravity flow and collection with pumping from sumps to surface treatment and/or disposal areas. To minimize the formation of acid water, properly placed sumps and pumping systems can reduce the time the water is in contact with pyritic materials. The size and location of sumps are also governed by the ability to discharge water on the surface with minimum power.

Because pumping requires, on average, 0.5 kWh to lift 1,000 gal of water against a 100-ft head, and this cost grows with both the volume pumped and the head, gravity drainage and efficient pumps are the most cost-effective means of water handling. The capacity of water treatment facilities depends upon flow rate and the concentration of pollutants. In designing a treatment system, using infiltration control and efficient water handling to reduce the amount of water flowing through the mine and to minimize the amount of acid formed can result in substantial savings in water treatment costs.
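The 0.5-kWh figure can be checked against the hydraulic minimum, E = ρgVh. The Python sketch below computes the ideal energy to lift 1,000 gal of water 100 ft and the overall pump efficiency implied by the 0.5-kWh average; the unit constants are standard, and the efficiency argument is the only free parameter.

```python
G = 9.81                # gravitational acceleration, m/s^2
LITERS_PER_GAL = 3.785  # U.S. gallon
M_PER_FT = 0.3048
J_PER_KWH = 3.6e6


def pumping_energy_kwh(gallons: float, head_ft: float, efficiency: float) -> float:
    """Electrical energy (kWh) to lift water: E = rho * g * V * h / efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    mass_kg = gallons * LITERS_PER_GAL          # water is about 1 kg per liter
    hydraulic_j = mass_kg * G * head_ft * M_PER_FT
    return hydraulic_j / efficiency / J_PER_KWH


ideal = pumping_energy_kwh(1000, 100, 1.0)      # hydraulic minimum, no losses
implied_eff = ideal / 0.5                       # efficiency implied by 0.5 kWh
print(f"ideal energy: {ideal:.2f} kWh per 1,000 gal at 100 ft")
print(f"implied overall efficiency: {implied_eff:.0%}")
```

The ideal energy is about 0.31 kWh, so the 0.5-kWh average corresponds to an overall pump-and-motor efficiency of roughly 63 pct. Because energy scales linearly with both volume and head, halving either halves the pumping energy.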

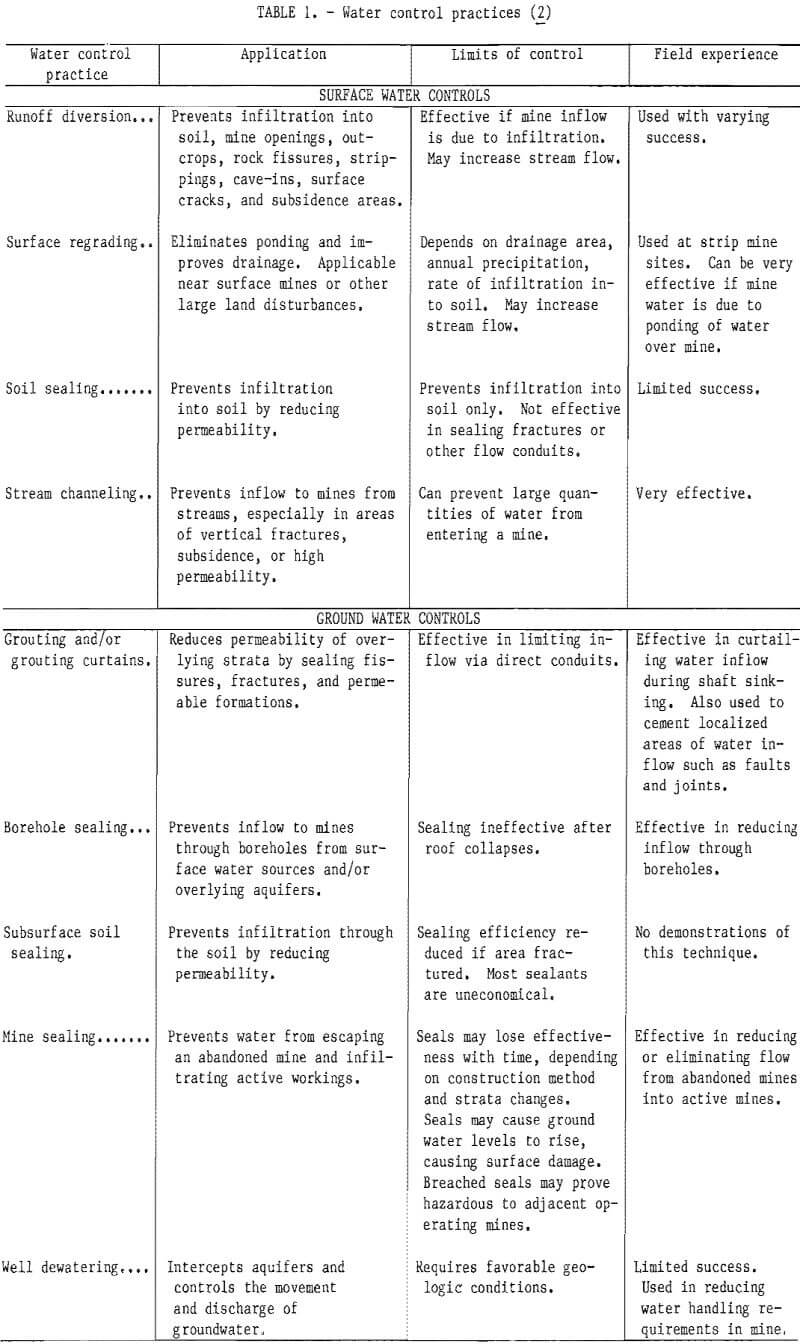

A recent Bureau of Mines contract study examined current state-of-the-art methods of water diversion and overburden dewatering in Appalachian bituminous coalfields. It was found that most water enters underground mines through water-bearing strata in contact with the coal seam, from surface seepage, through faults and fractures, or from abandoned workings. If surface water is the source, runoff diversion, regrading, soil sealing, and streambed modifications are possible control methods. The influx of ground water may be controlled by reducing the permeability of overlying strata, sealing abandoned mines, and well dewatering in advance of mining. Although all of these methods have been tried to some extent (table 1), their successful application depends largely upon geologic and hydrologic conditions. In general, the standard approach to handling the influx of water into underground mines consists of collecting the water and pumping it to the surface. At present it appears that water diversion and overburden dewatering are, at best, supplements to traditional water handling procedures. Such methods can help control large influxes of water that could disrupt production, and they can reduce the cost of water treatment.

In coal mines where fracture-dominated inflow is localized, intercepting water entering through faults and fractures may be feasible. Water flowing through such fracture zones is essentially surface water and would not normally require treatment. The Bureau has awarded a contract to conduct a pilot study on controlling fracture-dominated inflow. The study will develop design criteria for, and determine the cost effectiveness of, intercepting nonacid water flowing through localized fractures and transporting it to the surface. Economic and engineering analysis of this process should determine whether it would substantially reduce the cost of treatment.

Coal storage piles and refuse piles may also be sources of ground water contamination. Rainfall percolating through such piles may leach acid and/or other toxic ions and carry them into ground water. The Bureau has awarded a contract to assess the extent of ground and surface water contamination from coal storage piles. Methods to prevent contamination of ground water include surface collection, impoundment, and treatment of runoff water. The study will also include preliminary cost estimates and a discussion of the beneficial and adverse effects of these methods.

Another area of concern is the effect of large-scale lignite mining in western Tennessee on ground water resources. At present there are few data available on the effects of mining upon ground water in the alluvial plain, in both shallow and deep aquifers. To gather data, four wells and two pits have been dug to determine ground water levels, movement, and chemistry. A contract report will assess the hydrologic impact of lignite mining.

Summary and Recommendations

The Federal Water Pollution Control Act of 1972 set effluent standards for coal mines, requiring treatment for acid mine drainage. Although these standards have been in effect for almost a decade, there has not been significant improvement in the water quality of many streams affected by mine drainage. This lack of improvement is related to the amount of acid drainage flowing from abandoned mines.

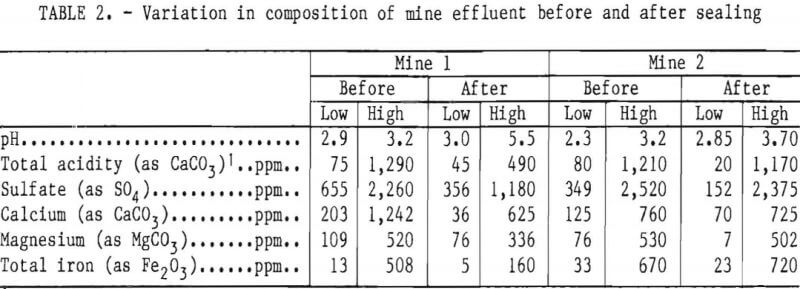

It has been known for years that air, water, bacteria, and pyrite are essential elements in acid production, yet at present there is no standard method or program for limiting the formation of acid mine drainage. Controlled release of anionic surfactants appears to be applicable to near-surface sources of acid production but not to long-term treatment of underground mines. Mine sealing, which is intended to limit acid production in abandoned mines, has been only marginally successful (table 2). Bureau of Mines studies indicate that surface reclamation and seals at mine openings do not retard the passage of air into a mine. In many cases, seals used to flood a mine or the surrounding strata cannot withstand the continuous hydraulic pressure, resulting in failure of the seal or seepage around it. The Bureau is now evaluating mine seals in several areas in order to determine relevant chemical, geological, and engineering parameters and to determine the long-term effect of mine sealing on water quality.

Mine sealing is relevant not only to abandoned mines, but also to operating mines that will close in the future. At present, operators must either eliminate acid production or treat acid drainage indefinitely. Bond forfeiture may therefore become a realistic economic alternative to the long-term cost of water treatment. In this area, there is a need for development of low-cost seals that will maintain their integrity indefinitely. Other methods such as controlled roof collapse, water diversion, bacterial inhibition, and improved drainage should be investigated to determine their usefulness in reducing the formation of acid in mines that are no longer operating.
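Comparing bond forfeiture with indefinite treatment is a present-value question. In the minimal Python sketch below, treating "indefinitely" is modeled as a perpetuity, whose present value is the annual cost divided by the discount rate; this discounting approach and all input values are assumptions for illustration, not figures from the Bureau's studies.

```python
from typing import Optional


def pv_of_treatment(annual_cost: float, rate: float, years: Optional[int] = None) -> float:
    """Present value of a constant annual treatment cost paid at year end.

    years=None models treatment continuing indefinitely (a perpetuity,
    C / r); otherwise the ordinary annuity formula C * (1 - (1 + r)**-n) / r.
    """
    if rate <= 0:
        raise ValueError("discount rate must be positive")
    if years is None:
        return annual_cost / rate
    return annual_cost * (1 - (1 + rate) ** -years) / rate


# Hypothetical inputs for illustration only.
annual_cost = 50_000.0   # assumed yearly treatment cost, dollars
rate = 0.05              # assumed real discount rate
print(f"indefinite treatment: ${pv_of_treatment(annual_cost, rate):,.0f}")
print(f"20 years of treatment: ${pv_of_treatment(annual_cost, rate, 20):,.0f}")
```

Because the present value of a perpetuity is finite, it can be compared directly with the amount of a reclamation bond; the comparison is sensitive to the discount rate chosen.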

Improved prediction of acid drainage potential is being investigated in order to eliminate or minimize the formation of acid mine drainage. At present, core samples are analyzed for total sulfur and potential alkalinity, or tested with slow leaching methods. These procedures have not been correlated with acidity measurements in the field, and they are generally acknowledged as only an approximation of potential acid problems. Available techniques will be tested at sites where the extent of acid production is known, to determine their accuracy and possibly develop modifications. Rapid-leaching tests will also be examined for their applicability to the determination of acid potential. Accurate methods of predicting acid potential of coal and overburden will allow better permitting by State agencies, improved mine planning, and improved reclamation.
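Total sulfur and potential alkalinity are commonly combined in an acid-base account. The conventional factor of 31.25 follows from stoichiometry in which each mole of pyritic sulfur ultimately yields one mole of sulfuric acid, neutralized by one mole of CaCO₃ (about 100 g per 32 g of S); 1 pct S in 1,000 tons of material is 10 tons of S, or 31.25 tons of CaCO₃ equivalent. The Python sketch below assumes all measured sulfur is pyritic, which is one reason such accounts are only approximations; the sample values are hypothetical.

```python
def acid_base_account(total_sulfur_pct: float, neutralization_potential: float) -> dict:
    """Acid-base account for an overburden or coal sample.

    Maximum potential acidity (MPA) assumes all sulfur is pyritic and
    oxidizes completely: 1 pct S -> 31.25 t CaCO3 per 1,000 t of material.
    neutralization_potential (potential alkalinity) is in the same units.
    A negative net neutralization potential (NNP = NP - MPA) flags
    potentially acid-producing material.
    """
    mpa = 31.25 * total_sulfur_pct
    return {"mpa": mpa, "nnp": neutralization_potential - mpa}


# Hypothetical core sample: 1.2 pct total sulfur, NP of 20 t CaCO3 / 1,000 t.
result = acid_base_account(total_sulfur_pct=1.2, neutralization_potential=20.0)
print(f"MPA = {result['mpa']:.1f}, NNP = {result['nnp']:.1f} t CaCO3 per 1,000 t")
```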

In operating mines, the need to treat large volumes of acid drainage is a problem. Currently, lime neutralization with aeration is the standard method of treating acid mine drainage. This method is relatively expensive, requires handling a caustic substance, and produces a sludge with poor settling characteristics. The Bureau has developed and is now improving a treatment system using limestone. Limestone treatment is less expensive, uses a noncaustic material, and produces a denser sludge. Routine use of the system requires the rapid oxidation of ferrous iron at acid or neutral pH. In an initial field study, activated carbon did not have the anticipated catalytic effect on the iron oxidation rate. Presently, studies are underway to determine if iron-oxidizing bacteria grown on activated carbon can increase the rate of iron oxidation in acid mine drainage. A study using bacteria grown on clay particles in a sequencing batch reactor has the same purpose. The development of an effective oxidation step will allow full-scale testing of the limestone treatment process. At present, other methods of treating acid mine drainage are not economically or technically practical. Limestone treatment seems to be one alternative that could be technically feasible and economically superior to lime treatment, although at present it is primarily applicable to waters with a low ferrous iron content. It also has the advantage of being readily adaptable to reclaiming streams affected by drainage from abandoned mines. A passive system using sphagnum moss and limestone will be tested as a means of improving water quality in low-flow streams.
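Sizing a limestone system starts from the acidity load. The minimal Python sketch below assumes acidity is reported as mg/L CaCO₃ equivalent and uses the common conversion of 8.34 lb per million gallons per mg/L. The `purity` and `utilization` factors are hypothetical placeholders reflecting that limestone generally must be fed in excess of the stoichiometric amount, and the acidity and flow values are illustrative only.

```python
LB_PER_MGAL_PER_MG_L = 8.34   # lb of solute per million gal at 1 mg/L


def caco3_demand_lb_per_day(acidity_mg_l: float, flow_gpm: float) -> float:
    """Stoichiometric CaCO3 demand, with acidity expressed as mg/L CaCO3."""
    million_gal_per_day = flow_gpm * 1440 / 1e6   # 1,440 min per day
    return acidity_mg_l * million_gal_per_day * LB_PER_MGAL_PER_MG_L


def limestone_feed_lb_per_day(acidity_mg_l: float, flow_gpm: float,
                              purity: float = 0.9, utilization: float = 0.6) -> float:
    """Limestone feed rate allowing for assumed impurity and incomplete reaction."""
    return caco3_demand_lb_per_day(acidity_mg_l, flow_gpm) / (purity * utilization)


# Hypothetical discharge: 500 mg/L acidity at 1,000 gal/min.
stoich = caco3_demand_lb_per_day(acidity_mg_l=500, flow_gpm=1000)
feed = limestone_feed_lb_per_day(acidity_mg_l=500, flow_gpm=1000)
print(f"stoichiometric CaCO3: {stoich:,.0f} lb/day; limestone feed: {feed:,.0f} lb/day")
```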

It is apparent that 10 years of mandated water treatment have not produced a significant improvement in overall water quality. Although treatment of acid drainage from active mines prevents further deterioration, the majority of mine water pollution originates from abandoned mines. At present there is a need to develop methods for reclaiming polluted streams and for at-source control of acid production. Research is needed to develop limestone treatment into a practical alternative to lime neutralization. To address these and other acid mine drainage pollution problems, the Bureau of Mines is conducting research in bacterial inhibition to control acid generation, improved water handling to reduce acid load, development of improved mine seals, rapid biological oxidation of ferrous iron, improved sludge disposal practices, low-maintenance treatment of small streams, reclamation techniques to reduce acid drainage formation, and limestone treatment of acid drainage (table 3).

Work in these research areas comprises a comprehensive approach to solving many of the longstanding problems associated with acid mine drainage. Improved treatment methods, at-source control methods, and stream reclamation methods resulting from the Bureau’s research program will make a significant contribution to upgrading water quality in mining areas.