
One factor that is seldom considered is that over 25% of the original weight of steel liners and balls is discarded as waste. This is particularly true for mill liners: “Mill liners are finished metal products of some precision, they cost two to four times the basic cost of steel shapes, and 25% of the new weight is thrown away when they are worn out”. Ideally, the liners and media should be inexpensive, long lasting, easy to install and remove, and should be worn down to a very small fraction of their original weight.

Steel wear in grinding is of economic and metallurgical significance, especially when large diameter primary mills are used in grinding of abrasive low grade ores. As the operating costs are escalating with skyrocketing energy costs, the operators are giving increased attention to lowering unit costs. In addition, improved balls, liners and lifters contribute to higher productivity when grinding circuit availability factor is increased.

One of the aims of the minerals processor is to find and use every practical means of obtaining the most economical recoveries and lowering milling costs. Recent studies on the use of improved liner materials and design, special steels for grinding media, improved operating conditions, grinding additives, and rubber liners have shown significant reductions in steel consumption. However, the use of these methods has been limited to a handful of concentrators. The purpose of this paper is to review and analyze the factors involved in the wear of steel liners and grinding media, and the methods that reduce steel wear, with a view to emphasizing their importance to minerals processors.

Factors Involved in Steel Wear

In the tumbling mills (autogenous, semi-autogenous, rod and ball) wear is generally expressed in terms of steel consumption per ton of ore ground or per kWh consumed by the mill. When comparing the wear rate of different ores in different types of mills, unit weight/kWh is considered a better measure. The steel wear or loss is a net result of the loss due to mechanical wear from impact, abrasion, attrition and fatigue, and that due to corrosion. Even though it is difficult to assess the relative contributions of the various factors involved in the wear process, this is essential for the development of remedies or methods of wear reduction. In a tumbling mill, the individual losses due to mechanical wear and corrosion will depend upon some of the following milling system parameters.

Material Composition

- Balls and liner

- Feed

Physical Parameters

- Number and diameter of balls

- Mill diameter

- Mill speed

- Feed and product particle size

- Ore hardness and abrasiveness

- Pulp temperature

- Liner design

Chemical Parameters

- Water analysis

- Pulp pH

- Slurry composition

- Pulp oxidation potential

Mechanical wear is influenced by material composition and physical parameters; whereas, corrosion wear is influenced by all three groups of parameters of the milling system. One of the most difficult tasks is the direct establishment of contribution of corrosion wear. An indirect method of measuring corrosion contribution is to compare steel wear in wet and dry grinding. Metal loss per ton of ore ground is higher in wet grinding than in dry grinding for a typical ore of average work index 13 kWh/t. It is difficult to explain the large increase in the rate of metal loss in wet grinding unless some mechanism other than abrasion is involved in the grinding process. Hence, the mechanism to account for the large increase in metal loss with wet grinding systems is almost certainly corrosion. Recognized authorities attribute 40-90% of the steel consumption during wet grinding to corrosion.
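The wet-versus-dry comparison described above can be turned into a rough estimate of the corrosion share. The sketch below assumes that mechanical wear is the same in wet and dry grinding, so that the whole difference is attributed to corrosion; this is a simplification that ignores any interaction between abrasion and corrosion. The function name and the wear figures are illustrative only and do not come from the source.

```python
def corrosion_share(wet_wear, dry_wear):
    """Fraction of wet-grinding steel loss attributed to corrosion.

    Assumption (not from the source): mechanical wear is identical in wet
    and dry grinding, so the extra loss in wet grinding is all corrosion.
    Both arguments must use the same unit, e.g. kg of steel per ton of ore.
    """
    if wet_wear <= 0:
        raise ValueError("wet_wear must be positive")
    if dry_wear < 0 or dry_wear > wet_wear:
        raise ValueError("dry_wear must lie between 0 and wet_wear")
    return (wet_wear - dry_wear) / wet_wear


if __name__ == "__main__":
    # Hypothetical figures chosen only to illustrate the arithmetic.
    share = corrosion_share(wet_wear=1.0, dry_wear=0.25)
    print(f"Estimated corrosion share: {share:.0%}")
```

With these made-up inputs the estimate is 75%, which happens to fall inside the 40-90% range quoted above; real measurements would be needed to say anything about a particular mill.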

Wet grinding has all the necessary elements needed for an active corrosion process to proceed. These elements are:

- Large surface area (thousands of square meters) of grinding media and mill liner.

- Open circuit corrosion potential of the mineral particles more noble (cathodic) than that of the grinding steel.

- Abrasion that continually removes the protective film from the grinding steel surface.

Electrochemical measurements of steel in grinding mill environments indicate that corrosion currents exist which are large enough to account for a major proportion of the observed steel consumption in an operating mill. Since the grinding steel is in a very abrasive slurry environment, the determination of corrosion rate for the media is not straightforward. In many cases the ore in the slurry is much harder (indentation hardness) than the steel. The steel surface that is being abraded is composed of an iron oxide layer that forms and protects the steel from further corrosion. If this iron oxide layer is abraded off, an active steel surface remains that is capable of corroding at a rapid rate. It is believed that the large majority of the steel is in the active state, the anodic electrode, because of rapid abrasion. In such cases, the anodic area is the active abraded area of the steel; whereas, the cathodic areas are the passive areas of steel and the minerals in the slurry.

Based on these theoretical considerations, many researchers have attempted to develop corrosion inhibitors that can rapidly repair the damaged iron oxide film. In such systems the kinetics of protective film formation is an important consideration, since the steel is in constant contact with the corrosive conditions in the mill.

The overall wear of the steel in grinding mills occurs as a peeling of the steel surfaces at a constant radial rate per unit time. This concept, although challenged at times, was first introduced by Prentice in evaluating the wear of grinding balls, and was subsequently demonstrated on large ball mills. The radial wear rate of the steel grinding media and liners should depend upon the hardness and composition of the media, the hardness of the ore, and the pressure between the rods, which in turn depends upon mill diameter and mill speed.
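The constant radial wear concept has a simple geometric consequence for a ball: the diameter falls linearly with time, while the mass falls with the cube of the diameter. The following is a minimal sketch of that relationship, assuming a perfectly spherical ball and a wear rate that stays constant over the whole life. The function names, the steel density and the numbers in the usage example are assumptions for illustration, not data from the source.

```python
import math

STEEL_DENSITY_KG_M3 = 7850.0  # typical value for steel; an assumption here


def ball_diameter(d0_mm, radial_rate_mm_per_h, hours):
    """Diameter after `hours` of grinding at a constant radial wear rate."""
    # The radius shrinks by radial_rate * hours, so the diameter shrinks twice as fast.
    d = d0_mm - 2.0 * radial_rate_mm_per_h * hours
    return max(d, 0.0)  # a fully consumed ball has zero diameter


def ball_mass_kg(d_mm, density=STEEL_DENSITY_KG_M3):
    """Mass of a solid sphere of diameter d_mm."""
    d_m = d_mm / 1000.0
    return density * math.pi * d_m ** 3 / 6.0


def mass_fraction_remaining(d0_mm, radial_rate_mm_per_h, hours):
    """Remaining mass as a fraction of the new-ball mass."""
    d = ball_diameter(d0_mm, radial_rate_mm_per_h, hours)
    return (d / d0_mm) ** 3


if __name__ == "__main__":
    # Hypothetical 100 mm ball wearing at 0.01 mm of radius per hour.
    print(ball_diameter(100.0, 0.01, 1000.0))
    print(mass_fraction_remaining(100.0, 0.01, 1000.0))
```

In this hypothetical case a 20% loss in diameter corresponds to roughly half of the original mass being consumed, which is why mass loss per ball is fastest when the ball is new and its surface area is largest.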

The mill operator has very little control on some of the parameters of the milling system that affect overall wear rate. However, there are other parameters that allow one to control the wear rate of steel. What follows below is a discussion of some of the methods that allow one to control the wear rate of steel by an appropriate selection of the milling parameters. These methods of steel wear reduction can be easily grouped as follows:

- Improved composition of mill liners

- Improved composition of grinding media

- Improved design of liners

- Improved operating conditions

- Corrosion reducing additives

- Composite material liners

- Rubber liners

Methods of Steel Wear Reduction

Improved Composition of Steel Liners

Primarily three groups of ferrous alloys are being used for the grinding mill liners. These are:

- Martensitic alloy white irons

- Martensitic heat treated alloy steel

- Austenitic alloy steel.

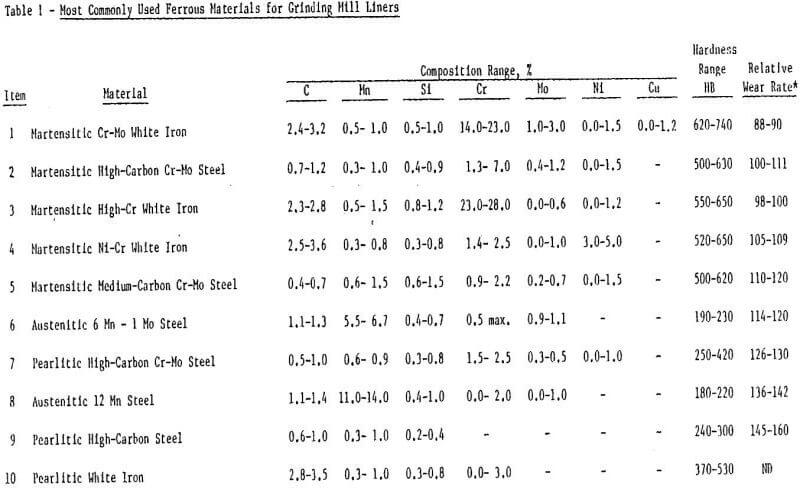

The composition and relevant properties of these alloys are given in Table 1. In recent years a large number of alloy compositions have been produced by varying the contents of carbon, manganese, silicon, chromium, molybdenum and nickel. Martensitic alloy white iron such as Ni-Hard is the preferred liner material for resisting cutting abrasion and should be used where impact loading is not so severe as to cause gross impact fractures. If the abrasives are very hard or tough, a higher alloy (but more expensive) iron such as 15-3 may need to be employed. When impact is severe, martensitic alloy steels may be used to resist abrasion, but they may be subject to gross fatigue failure in the presence of long cycles of heavy loading. Austenitic steels should be employed when both impact and fatigue loading accompany highly abrasive ore grinding.

In particular, the martensitic Cr-Mo white iron, item 1 in Table 1, is basically a range of compositions, containing 14-23% Cr with sufficient Mo plus optional amounts of Ni or Cu so that heavy section liners can be hardened by heat treatment to produce a structure of Cr-Fe carbides in a hard martensitic matrix. This alloy iron has the best abrasion resistance of any of the ferrous materials. It also has good resistance to spalling and breakage in service. However, its use requires careful consideration in mills with high impact conditions.

Improved Composition of Steel Media

Grinding media wear is very much dependent upon manufacturing processes, metal density, alloying elements (C, Mn, Cr, Mo, Cu, Ni and Si), heat treatments, grinding conditions and chemical corrosion environment. Careful control of these factors is essential for production of wear resistant steel media. Significant progress in alloy development for wear resistant grinding media has taken place in the last two decades. Carbon is very effective in increasing hardenability and decreasing wear of grinding media alloys. Norman and Hall report that high carbon martensitic steels are best suited for wear resistance. Depending on the hardenability and impact levels required, the carbon content of steel for grinding balls varies from 0.5 to 1.1%; whereas, for grinding rods a lower carbon content of 0.6 to 0.8% is used because of the more severe heat treatment cooling rate. The other most common alloying element is manganese, whose content ranges from 0.2 to 1.6%. Chromium is the third choice of steelmakers as a hardenability agent. Molybdenum and copper are used to suppress pearlite formation and to increase tensile strength and yield strength of the steel, respectively.

At present, over 80% of the grinding media are forged out of carbon and alloy steel; and less than 20% of the media is of cast carbon and alloy steel. A small amount (<3%) of media are of cast Ni-Hard and high chromium cast iron alloy balls, slugs and billets. This situation appears to be changing rapidly. Alloy research has led to more economic low-nickel chill-cast Ni-Hard irons and to the impact resistant white irons. In the production field, automatic molding, permanent molds and automatic casting have lowered production costs. The rising cost of the forging industry appears to make special abrasion resistant cast irons more economical. However, one should remember that metal density controls ball wear rate, and forged balls are generally more dense than cast balls because forging minimizes voids.

Greater acceptance by the milling industry of the cast slug or billet has also spurred the growth of cast iron. The slug is extensively used by ferrous and nonferrous concentrators in Eastern Canada. Newly developed martensitic cast irons have been available which greatly improve the abrasion resistance of slugs and enable them to compete favorably with high carbon forged steel grinding balls. Commercial testing results indicate that martensitic iron slugs reduce the cost per ton milled by 18% and 24% as compared to high Cr cast iron balls and forged steel balls, respectively.

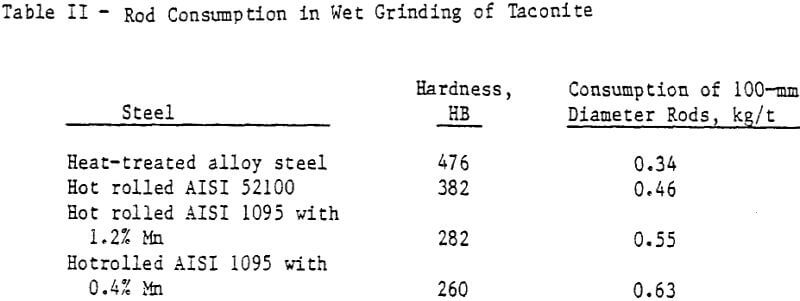

Reserve Mining Company reports that rod wear can be lowered by raising the average volumetric hardness of the rods. Also, their tests showed that grinding rod scrap could be optimized by varying rod alloys and diameters. Table II shows some examples of rod consumption of ferrous alloys of varying hardness values in wet grinding of taconite.

Improved Design of Liners

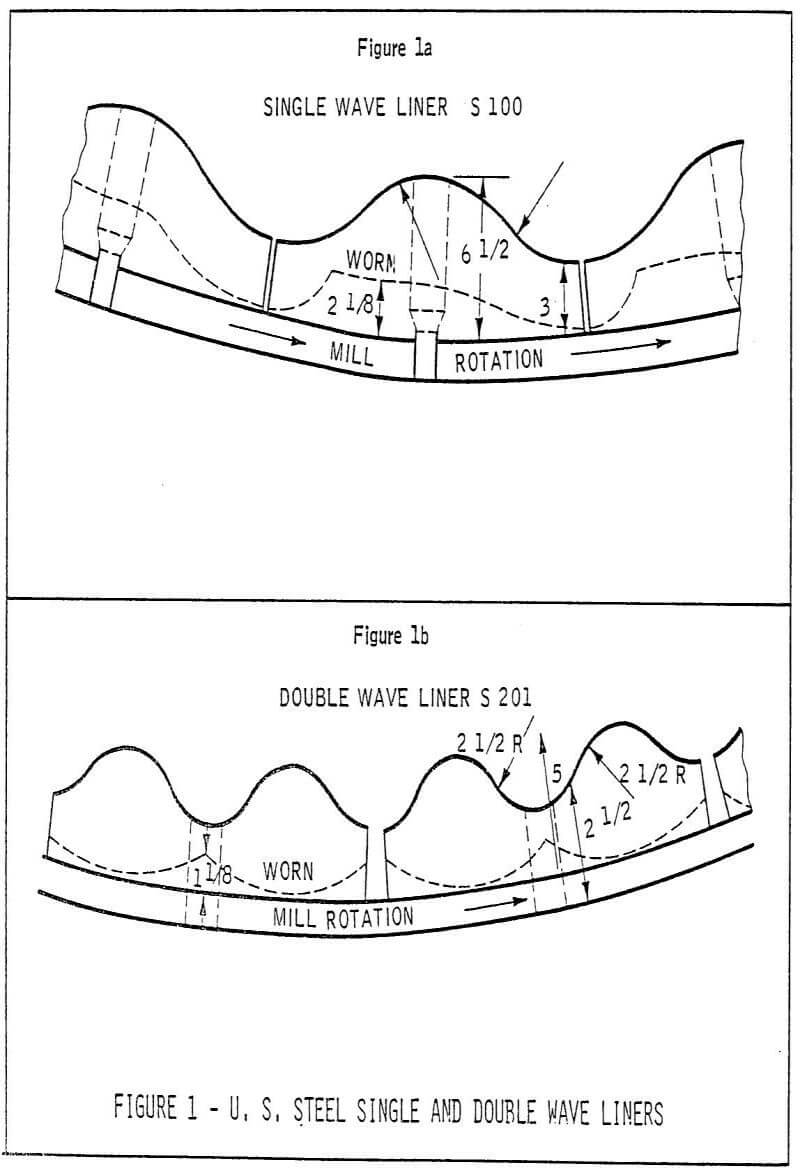

Mill liners are the agents which transmit energy and motion to the grinding balls which, in turn, crush and grind the ore. The way in which the mill liners tumble the ball charge determines how the balls will grind the ore and how the liners themselves wear. The tumbling characteristics of the grinding media are determined by the height, slope and radius of curvature of the liner surface. Hence the design of liners becomes an important factor in determining liner wear rate. It has been found that flat or convex liner surfaces tend to reduce rolling friction to a constant value. Concave surfaces of wave valleys increase rolling friction as they approach the size of the ball. Rolling resistance is maximum when valley radius equals ball radius. As rolling resistance increases, the balls roll less and wear rate decreases. Hence, wear rate of the liners decreases when impact of the balls on the liner waves nears perpendicular.

In recent years cast liners have been gaining an edge over the rolled liners. This is because of the ease with which design and casting pattern modifications can be made in casting without prohibitive expense.

Some work shows that a design change involving wave height and spacing reduced liner wear from 228 kg/day/100 sq meter to 208 kg/day/100 sq meter. The same work reported that replacing 6-1 manganese steel with chrome-moly cast steel decreased the wear rate further, to 126 kg. This implies that a suitable combination of design and alloy composition is required to minimize liner wear.
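The relative size of the two improvements is easier to compare as percentages. The short sketch below works through that arithmetic, assuming the 126 kg figure is expressed in the same unit (kg/day/100 sq meter) as the other two values; the function and variable names are illustrative.

```python
def percent_reduction(before, after):
    """Percentage decrease from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100.0 * (before - after) / before


# Wear rates quoted in the text, assumed to share the unit kg/day/100 sq meter.
ORIGINAL_DESIGN = 228.0
MODIFIED_DESIGN = 208.0
MODIFIED_DESIGN_CR_MO = 126.0

if __name__ == "__main__":
    design_only = percent_reduction(ORIGINAL_DESIGN, MODIFIED_DESIGN)
    alloy_only = percent_reduction(MODIFIED_DESIGN, MODIFIED_DESIGN_CR_MO)
    combined = percent_reduction(ORIGINAL_DESIGN, MODIFIED_DESIGN_CR_MO)
    print(f"design change alone: {design_only:.1f}%")
    print(f"alloy change alone:  {alloy_only:.1f}%")
    print(f"both together:       {combined:.1f}%")
```

On these figures the design change accounts for about 9% and the alloy change for about 39%, giving a combined reduction of about 45%.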

The Lornex concentrator was able to increase the grinding circuit availability from 85-88% to over 94% by employing modifications to liners, lifters and grates in a semi-autogenous mill. Typical of these improvements was the development of two piece wedge bars for the shell. By redesigning the wedge in a two piece cap and base form, the foundry achieved two advantages: first, Lornex had less throwaway metal because the base is replaced less frequently; second, the foundry can do a better heat treating job of the two pieces, and thus achieve longer life for the cap.

A similar improvement was achieved in the discharge head to reduce grate wear that was confined to one specific area. By a special design of the split in the grate, only one piece needed replacement at the original rate. This avoided the wastage caused by throwing the entire grate away every time.

Another successful improvement was caused by redistribution of shell liner weight. Since the wear of liners developed in the center, the liners were built with greater convex form at the center. This increased liner life by 30-40%.

In an attempt to optimize liner design, Climax experimented on their 4.0 x 3.6 meter overflow ball mill with various designs of liners. The findings showed that the more abrupt and skid-resistant the liner design, the lower was the metal wear. A reduction of 28% in wear rate accompanied the change from S-100 single wave (Figure 1A) to S-201 double wave (Figure 1B) liners made from the same alloy steel. It should be noted that this design produced poor tonnage. Since the primary goal in a milling operation is to produce maximum mill tonnage, in such cases, mill operating conditions should be examined to improve mill tonnage and reduce wear.

Based upon extensive experimentation on large diameter ball mills at Climax, another liner design modification was developed. The thrust of this work was to control wave migration. Dunn of Climax recommends control of liner design so that wave peaks end up centered on liner bolt holes. The counter-sunk bolt heads then remain in heavy sections and liners remain firmly bolted until they are worn clear through. By doing so, although the wear rate does not change, metal use efficiency increases when liner scrap weight is reduced and liner cost per ton ore ground is minimized.

Improved Mill Operating Conditions

In practice, the operating conditions of grinding mills are optimized for maximum ore throughput. However, under certain conditions maximum ore throughput and minimum steel wear do not go together, as the following example demonstrates.

At the Minntac operation when rod mill feed rates were increased from 300 to 400 tph in 4.2 x 6.1 meter mills, shell liner life was reduced from 2.7 million tons per set to 2.3 million tons per set. When the wave section of these liners was increased by one inch in height, the shell liner life was returned to a normal wear level and the tonnage per set increased to 2.54 million.
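Liner life quoted in tons per set can also be read as operating hours between relines, which is what matters for circuit availability. The sketch below converts the Minntac figures, assuming the quoted feed rates apply to a single mill and are held constant over the life of a liner set; the function name is illustrative.

```python
def liner_life_hours(tons_per_set, feed_rate_tph):
    """Operating hours a liner set lasts at a constant feed rate."""
    if feed_rate_tph <= 0:
        raise ValueError("feed_rate_tph must be positive")
    return tons_per_set / feed_rate_tph


# (description, tons per liner set, feed rate in tph) taken from the text.
CASES = [
    ("300 tph, original liners", 2_700_000, 300),
    ("400 tph, original liners", 2_300_000, 400),
    ("400 tph, taller wave section", 2_540_000, 400),
]

if __name__ == "__main__":
    for label, tons, tph in CASES:
        print(f"{label}: {liner_life_hours(tons, tph):.0f} operating hours")
```

Under these assumptions the sets last about 9000, 5750 and 6350 operating hours respectively. The taller wave section recovers most of the tonnage per set, but relines would still come round sooner than at the lower feed rate.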

Windolph has shown that liner design, mill speed and mill capacity must all be considered together to gain optimum lift of the media, which moves liner wear from the high into the medium category.

Large diameter mills emphasize liner economics because of the high expense of shut down time for relining the mills and for the relatively high cost of the large quantities of liners needed for relining. In the 6.7 x 7.9 meter autogenous mills, Swanson found that maintaining the lift bars in good condition very much reduced the wear on liner plates.

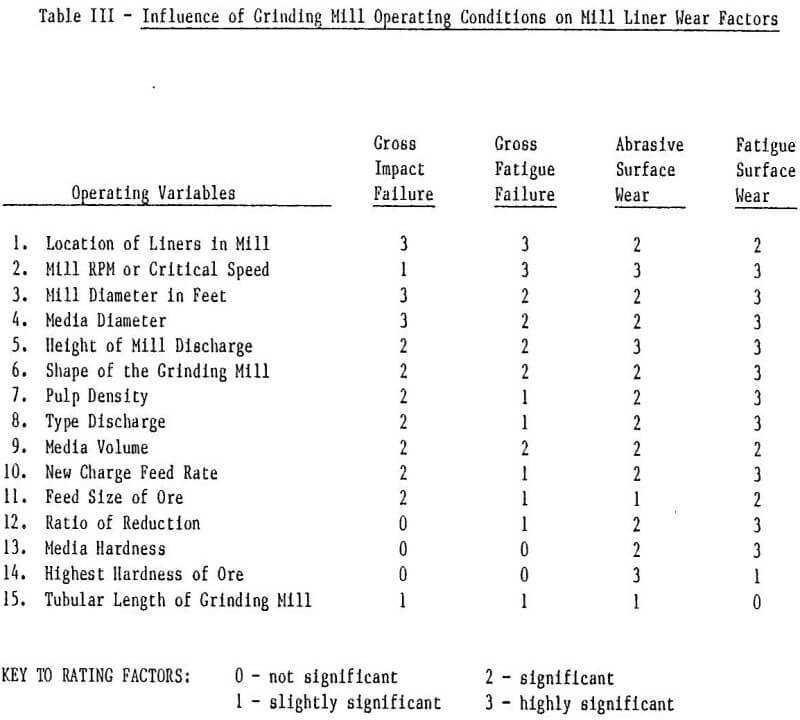

Historical data show that liner wear can be significantly decreased by operating the mills under low mechanical wear factors (gross impact, gross fatigue, abrasive, surface fatigue). The relationship between operating conditions and mechanical wear factors is presented in Table III, which numerically rates the influence of each of the 15 operating variables on each of the four wear factors. Rosenblatt states that, by careful examination of Table III, an operator can determine what steps to take when the liner wear in the mill has become excessive and little improvement is obtained by changing liner materials. When the wear is high (over 10 kg/10 meter²/day), an attempt should be made to shift the wear to the medium category. This is achieved by systematically examining the 15 operating variables to determine whether any of them can be modified. If a successful change is made, the choice of optimum material should then be considered for further improvement.

Reagent to Help Reduce Steel Consumption

Among the methods to prevent or reduce corrosion (metallic coatings, organic coatings, cathodic protection, treatment of the medium, and addition of inhibitors), only the use of inhibitors appears suitable for grinding mills. The use of coatings and treatment of the medium in the mill is not possible because of the severe impact and abrasive conditions in the mill.

Several investigators have shown that corrosion inhibitors sodium nitrite, sodium chromate and sodium metasilicate reduced the wear of forged steel grinding balls by up to 49% in grinding of various types of ores. Although the exact mechanism of wear reduction is not known, these studies conclude that corrosion must play a significant role in the wear of grinding media. One of the explanations of this phenomenon is that an efficient anodic inhibitor prevents corrosion of steel by forming a protective film on the surface. The film passivates steel, causing its potential to shift to the noble direction.

The contribution of corrosion wear to the overall wear of mill liners has not been studied as much as that for balls, except for laboratory studies on discs and plates. In a study by Wieser et al., abrasion-corrosion tests on various stainless steel discs rotated in alumina slurries showed that the combined effect of abrasion and corrosion is greater than the sum of each occurring separately. Hence, corrosion control becomes essential for reducing the overall wear of steel.

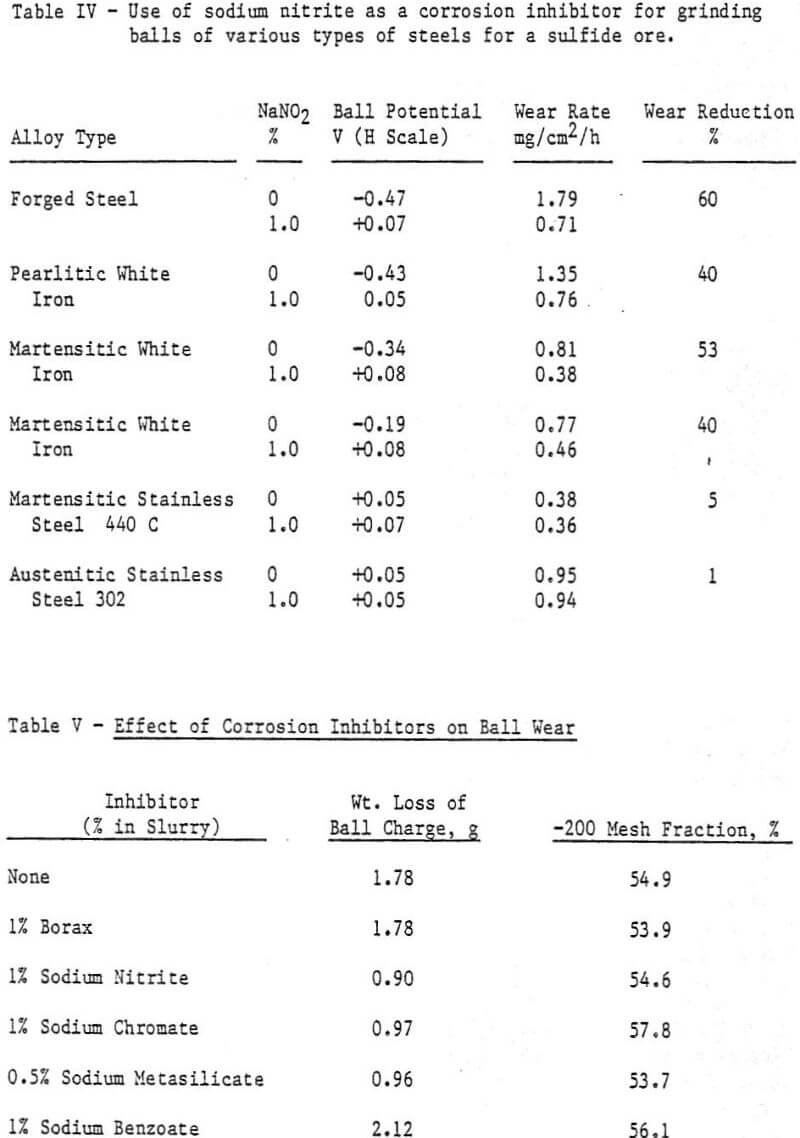

In a sulfide ore grinding study six alloys examined for corrosion inhibition with and without the addition of sodium nitrite showed the following:

- Forged steel and white cast iron grinding balls are susceptible to corrosive wear, which is reduced substantially by corrosion inhibition (See Table IV).

- Stainless steels are relatively immune to corrosive wear.

- Corrosion inhibitors cause ball potentials to shift in the noble direction. The magnitude of the potential shift is related to the reduction of wear caused by sodium nitrite.

Similar results were observed on a hematite ore.

Using the well documented corrosion inhibitors, Hoey et al. showed on a Cu-Ni ore that different inhibitors perform differently. Table V shows that sodium nitrite, sodium chromate and sodium metasilicate are effective; whereas, sodium benzoate usage resulted in an increase of wear on forged steel balls. It is worth noting that sodium chromate affected grinding work by increasing the -200 mesh material.

In the same study the influence of pH for corrosion inhibition was identified. Without sodium nitrite, ball wear decreased by 49% in the pH range 11.0 – 13.7; whereas, with 1% sodium nitrite, a 49% reduction of wear was observed at pH 10.0 – 11.0. Also, sodium nitrite did not reduce wear at initial pH = 9. Thus, there is a critical pH where sodium nitrite becomes effective. Similarly there is a critical dosage at which sodium nitrite was able to influence steel wear.

The dosages required for corrosion inhibition are higher than those for dispersion of slimes. The following example illustrates the comparative dosages. Alkaline silicates are used to disperse gangue slimes, to assist recovery, to produce brittle froth, and to depress quartz and silicates in dosages varying from 0.2 – 2.0 kg/t of ore. The minimum dosages of the corrosion inhibitors required are over 3.0 kg/t of ore. Hence these reagents become effective only at much higher than normal dosages. However, the actual amount required for a particular ore would probably be different. For example, in a sulfide concentrator, addition of lime to the pyrrhotite regrind mill reduced ball consumption by 40%. The lime was added at 0.6 kg/t of ore, which raised the pH from 8.2 in the feed to 9.1 in the discharge.
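Whether a reagent addition pays for itself depends on the value of the steel saved against the cost of the reagent. The sketch below sets out that balance per ton of ore. The 40% wear reduction and the 0.6 kg/t dose are the lime figures from the text; the ball consumption and both prices are hypothetical placeholders, and the function names are illustrative.

```python
def net_saving_per_ton(steel_kg_per_t, steel_price_per_kg, wear_reduction,
                       dose_kg_per_t, reagent_price_per_kg):
    """Steel cost saved minus reagent cost, per ton of ore.

    wear_reduction is a fraction, e.g. 0.40 for a 40% reduction.
    """
    steel_saving = steel_kg_per_t * steel_price_per_kg * wear_reduction
    reagent_cost = dose_kg_per_t * reagent_price_per_kg
    return steel_saving - reagent_cost


def break_even_reagent_price(steel_kg_per_t, steel_price_per_kg,
                             wear_reduction, dose_kg_per_t):
    """Highest reagent price per kg at which the addition still breaks even."""
    if dose_kg_per_t <= 0:
        raise ValueError("dose_kg_per_t must be positive")
    return steel_kg_per_t * steel_price_per_kg * wear_reduction / dose_kg_per_t


if __name__ == "__main__":
    # Hypothetical: 1.0 kg steel/t at 1.0 currency unit/kg, reagent at 0.1 per kg.
    # From the text: 40% reduction in ball consumption at a lime dose of 0.6 kg/t.
    print(net_saving_per_ton(1.0, 1.0, 0.40, 0.6, 0.1))
    print(break_even_reagent_price(1.0, 1.0, 0.40, 0.6))
```

The same balance applies to inhibitors dosed at over 3.0 kg/t, where the higher reagent consumption makes the break-even price per kg correspondingly lower. Any effect of the reagent on recovery or downstream processing is outside this simple balance.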

Composite Materials for Ball Mill Liners

The design of composite materials for wear resistant service aims at combining two or more materials in such a way as to achieve unique and useful properties not generally available in any single homogeneous material. The new generation of composite materials comprises metallic and part-metallic materials that are suitable for use in the high abrasion/moderate impact environment.

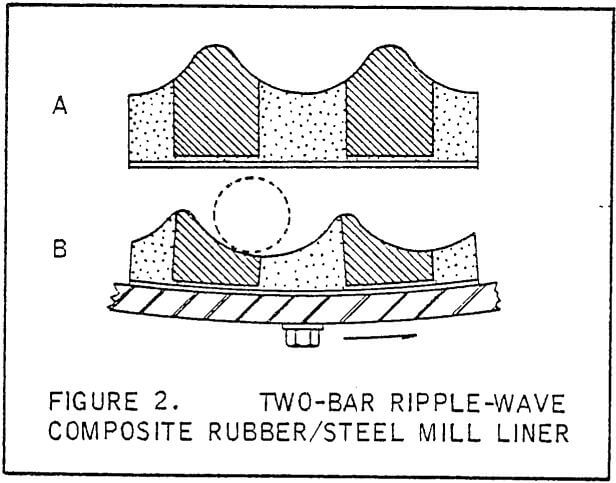

A recent development in this area involves a rubber and metal composite, in which a contoured composite shell liner suitable for either ball or rod mills has been designed. Mill liner design experts agree that tumbling mill shell liners with multiple wave contour provide the optimum performance. The distance between wave crests, the height of each wave and the radius of the valley between each wave crest are important for grinding efficiency and wear rate of liners. Ideally it would be desirable to design liners which retain the optimum wave contour for their entire life. However, in practice, waves tend to flatten out. The new hope is that the wave contour can be made permanent if the liner is made of two materials having different abrasion resistance, with the more abrasion resistant material forming the crests and the less abrasion resistant material forming the valleys. In primary grinding mills where rubber liners wear fairly rapidly, it would be feasible to use a highly abrasion resistant alloy iron or steel for the bars forming the wave crests and pressure mold these into a less abrasion resistant matrix to form the valleys in the composite assembly (See Figure 2A).

This design concept was further improved by Norman who patented the “Ripple-Wave” liners (Figure 2B). The ripple wave liner was “patch” tested in a large operating ball mill in Arizona, and the next step is to test the permanent-wave type of Ripple-wave liner in suitable ball and rod mills. In the long range development of these liners, several alloy composites should be evaluated for cast bars, and the design should be optimized.

An additional benefit of economic significance is the expected 5% increase in grinding efficiency with such composite mill liners. The initial cost of a composite liner is reported to be comparable to that of a cast Cr-Mo steel liner.

Rubber Liners

Swedish mineral processors have been the leaders in the use of rubber liners since the 1920s. The good abrasion resistance of rubber liners depends on their ability to yield elastically without being cut or scratched when an abrasive particle impinges upon them. Consequently, rubber liners tend to provide the best service when the abrasive particles are small and relatively free from hard, sharp edges. Extensive research has continued to expand the range of operating conditions. Through the development of superior rubber formulations and improvements in design and fastening methods, rubber liners can now be used successfully and economically in fine and coarse grinding operations.

The rubber formulations used for these liners are proprietary and are normally designed to suit operating conditions in a particular mill. Since the specific gravity of rubber is 1/6 that of steel, the weight of the liners decreases considerably. As a result, handling and down time have decreased considerably. Boliden reports a 50% reduction in liner cost, 2½ to 3 times faster installation and no loss of grindability by using the rubber liners.

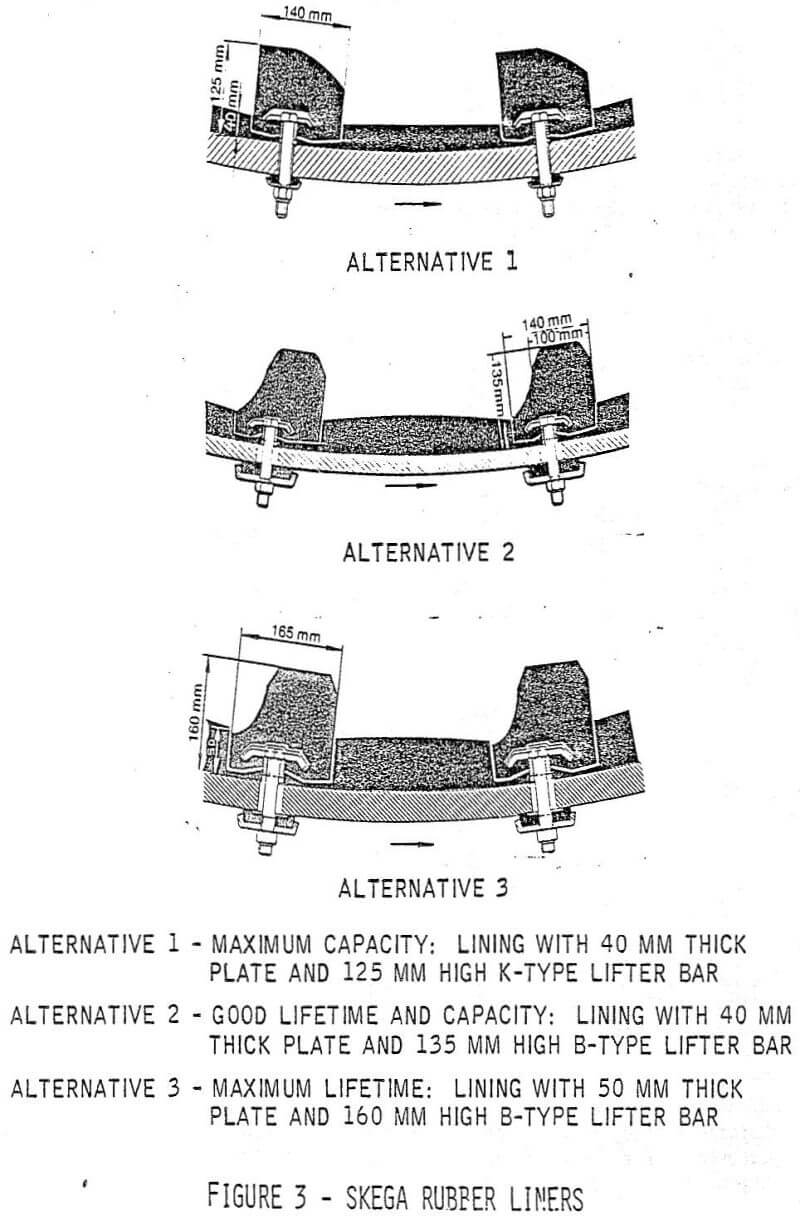

Skega designs rubber liners for three alternatives:

- Maximum capacity

- Good lifetime capacity

- Maximum life span

The basic design features that impart different qualities are the thickness of plate and design of lifter bar. (See Figure 3)

With the introduction of autogenous grinding new rubber qualities and designs have evolved to withstand the tremendous wear to which the lining is exposed. When first introduced in 1967 in Boliden the rubber lining was not economical. However, the continuous development work has gradually improved the life span, and from 1972, Skega lining has given better lining economy than that of steel. Fahlstrom states that Boliden’s experience with rubber liners in autogenous mills is very satisfactory at a very low liner cost and noise level.

Similar results have been reported with ball and rod mills. Rod mills require a special design of head liners to avoid disturbances in the tumbling of the rods. Because smooth liners have a high rate of wear and rod spearing has adverse effects on the head, head linings of rubber have so far not been installed.

Trelleborg is another producer of rubber liners for ball, rod, autogenous and pebble mills. Its liner designs and rubber compositions appear to be different from those of Skega. This type of competition should stimulate development of improved designs and materials.

Conclusions

This study on the wear of steel in grinding mills has led to the following general conclusions:

- The loss of steel due to wear in grinding mills represents a large portion of the operating cost. It is expected that the steel wear loss will continue to increase.

- The overall wear rate of steel is a function of abrasion, impact, fatigue and corrosion. Hence the overall wear rate is governed by the material characteristics, physical parameters of the milling system and chemical parameters of the ore, water and steel. Corrosion wear appears to be responsible for 40-90% of the total steel loss.

- Several methods for reducing steel wear have been identified. Different parts of the world use different methods to reduce steel wear. The mill operator has to study his specific problem and choose the methods most suited for his application.

- In considering the overall trends in the selection of liner materials for reducing steel wear, the steadily increasing labor rates for liner production and installation have narrowed the difference in cost among various candidate materials. Consequently, the trend in selecting materials for liners has been toward those which provide the best service life, even though their cost may be a little higher than that of a material with less wear resistance. This trend may continue. There is a large scope for cooperative efforts of producers and users of liners to improve both design and quality of material.

- The grinding media industry has developed a variety of materials to satisfy individual needs. Grinding media wear is very dependent upon manufacturing processes, grinding conditions and chemical corrosion. Cast steel grinding media appears to be evolving rapidly, and its usage is bound to increase.

- Modification of the liner design should be considered if it will maintain the integrity of the wave throughout the life of the liners, thereby lowering wear rates. The technical data necessary for making an optimum choice of the liner design is available, and it should be put to use.

- Because the highest milling efficiency and the least liner wear usually do not go together, mill operators must examine the operating conditions that are directly responsible for increased liner wear.

- Corrosive wear of steel is decreased by the use of certain corrosion inhibitors and pH control without affecting slurry characteristics. Since the mining industry uses some of these corrosion inhibitors, their use should be optimized for reducing steel wear.

- Composite materials, rubber, and other elastomers have been used successfully in liners for small and large diameter mills. There are a large number of polymers used by the rubber industry, each having different properties. The methods of rubber liner design enable one to choose the rubber lining parameters to suit the operating conditions at the least cost.

- Traditionally the mining industry has not given too much attention to decreasing the steel wear in the grinding mills. With the continued development of improved materials, designs, and operating conditions, it is possible today to decrease the steel wear. Successful application of the methods described above and those under development in the industry should be able to realize substantial savings in the operating costs.